AI-powered image generation models are advancing at a rapid rate, but it’s still common for them to churn out questionable images. Since it’s easy to assume that human prompts are the problem, I decided to test whether AI has an easier time working exclusively with AI-generated prompts.

Rules of the Experiment

When AI image generation models hit the scene a few years ago, we all thought they would sound the death knell for everyone working in visual media. That didn’t end up being the case, though. Despite being able to generate hyper-realistic photos, AI models often produced unpredictable results, especially if you required something a bit more complex (AI tends to struggle with hands, for example).

You can blame either the AI models themselves or us fallible humans and our inconsistent prompting skills. The natural way to find out who’s at fault is to see whether image generation models deliver better results when you feed them AI-generated prompts.

To test this hypothesis, I’ll use Gemini to create a series of prompts that avoid using the name of the object or the photograph I’m trying to generate. This will help check how well AI “reads” instructions. Granted, there is still a possibility that the model will heavily pull inspiration from the data it was trained on (particularly when recreating existing photographs), but it is what it is, as the kids say.

My tool of choice for generating images will be Bing Image Creator (yes, Bing still exists), which is based on DALL-E 3. To put the model through its paces, I’ll start with simple shapes and move to more complex images as the experiment progresses.

If you’ve used ChatGPT and the like, you’re already aware of just how long-winded some of the answers can be, and it wasn’t any different with the prompts Gemini spat out during my “trial” run. So, I decided to cap each prompt at 500 characters to keep them consistent.
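If you want to hold your own prompts to the same two rules (stay within 500 characters, never name the subject), a quick check along these lines would do. This is only a rough sketch: the function name `check_prompt`, the `banned_terms` list, and the sample draft are all made up for illustration and weren't part of my actual workflow.

```python
import re

MAX_PROMPT_CHARS = 500  # the cap used in this experiment


def check_prompt(prompt: str, banned_terms: list[str],
                 max_chars: int = MAX_PROMPT_CHARS) -> list[str]:
    """Return a list of rule violations; an empty list means the prompt passes."""
    problems = []
    if len(prompt) > max_chars:
        problems.append(f"too long: {len(prompt)} > {max_chars} characters")
    for term in banned_terms:
        # Whole-word, case-insensitive match: "Square" is caught, "squared" is not.
        if re.search(rf"\b{re.escape(term)}\b", prompt, flags=re.IGNORECASE):
            problems.append(f"names the subject: {term!r}")
    return problems


# Hypothetical draft that breaks the "don't name it" rule.
draft = "A flat shape with four equal sides; in short, a square."
print(check_prompt(draft, ["square"]))
```

An empty list means the prompt is safe to paste into the image generator; anything else tells you which rule it broke.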

How AI Does With Simple Shapes

Let’s start with a simple square. I asked Gemini to describe a square without referring to it by its name and it came up with this:

“A four-sided shape with all sides of equal length. Each internal angle measures exactly 90 degrees. It’s a regular quadrilateral with parallel opposing sides.”

After plugging the description into DALL-E, I got these results:

It’s a square, all right, although I think DALL-E went overboard with the geometry. Time to bump up the difficulty, so I asked Gemini to describe a cube.

“A three-dimensional shape with six identical faces. Each face is a regular quadrilateral with four equal sides and four right angles. It has twelve edges of equal length and eight vertices. All angles within the shape are right angles.”

The results are surprising:

Remember what I said about AI models being unpredictable? Well, here, DALL-E did generate a cube, but it got a bit confused and made it a Rubik’s Cube. Even though the prompt avoided the word like the plague, the AI got it partially wrong. Chalk it up to the popularity of the nerdy Hungarian toy.

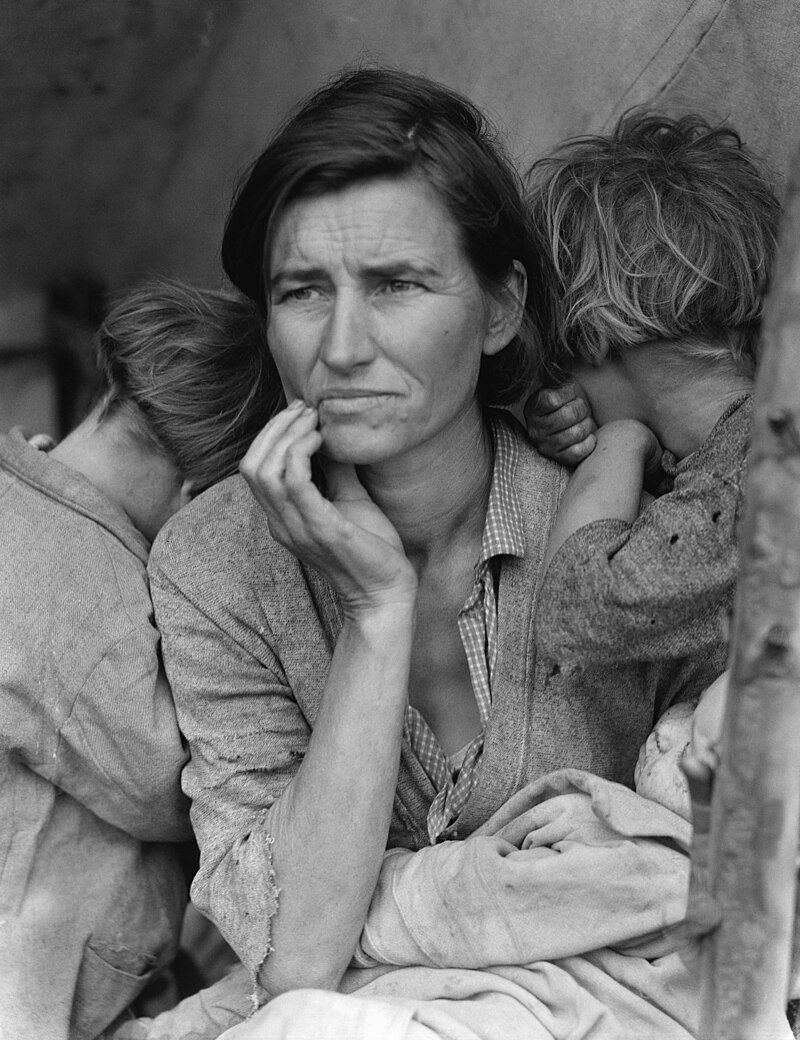

AI’s Take on Photography With Human Subjects

The cube situation shows that even with a detailed “impartial” description, AI can still misinterpret fairly direct instructions. So, let’s see how well it does with AI-generated descriptions of classic images, such as Dorothea Lange’s “Migrant Mother”. Here’s how Gemini described the original:

“A woman, her face etched with worry, looks off-camera. She is surrounded by her children, their faces hidden or turned away. Her hand rests near her face, conveying exhaustion and anxiety. The scene suggests poverty and hardship. The woman’s clothing is worn, and the overall composition is somber, emphasizing the weight of her circumstances.”

This is DALL-E’s interpretation of the famous photo:

Close enough! It’s not spot on, though: DALL-E clearly ignored the “surrounded by her children, their faces hidden or turned away” part, and instead of the “mother” resting her hand near her face, one of the children has taken over the role.

Let’s try something more complex. You’ve probably seen the iconic “Lunch atop a Skyscraper”:

“Eleven men sit on a steel beam, high in the air. They eat lunch, legs dangling. The beam is suspended above a sprawling city. The men appear relaxed, despite the extreme height. They wear work clothes, and the scene is captured from a slightly low angle, emphasizing the height.”

This masterful prompt yielded masterful results:

Once you disregard the classic signs of an AI image (identical bowls and “copied and pasted” subjects), it’s almost uncanny in terms of composition and overall vibe. That’s not surprising, though. Not only is this image super popular, but it’s also in the public domain, so I have a sneaking suspicion DALL-E already ingested it during training.

Can AI Handle Complex Photos?

Since this is the last “test” in the experiment, the gloves are off! While AI is good with human subjects, it generally falls apart when faced with complex and more “cryptic” scenes. So how about the iconic “Earthrise”, taken from lunar orbit during Apollo 8?

“A partially illuminated sphere hangs in a dark void. A smaller, gray-toned sphere rises above its horizon. The larger sphere displays mottled blues and whites, suggesting water and clouds. The stark contrast between the two spheres and the blackness emphasizes the fragility and isolation of the smaller, rising sphere.”

Gemini really dropped the ball (or should I say sphere) with this description. Since it was too abstract, I added the phrase “taken from a close lunar orbit” to the prompt, but it didn’t help much:

It’s a great progressive rock album cover, but it doesn’t have anything to do with “Earthrise”. To end the experiment, I’ve chosen the most obscure photograph so far, the industrial masterpiece “Armco Steel” by Edward Weston:

“A series of rounded, metallic industrial tanks fill the frame. Their forms are smooth and bulbous, creating a repetitive pattern. Light reflects off the surfaces, highlighting their curved shapes and creating a sense of volume. The composition emphasizes the abstract qualities of the industrial objects, focusing on form and texture rather than their function. The scene is stark and minimalist, with a strong emphasis on light and shadow.”

Seems like a good prompt. Let’s see if DALL-E agrees:

While I appreciate the sci-fi vibes, it doesn’t look anything like the original. I didn’t want to end the experiment with a colossal failure, so I decided to help the machine by adding the term “1920s photograph” at the end of the prompt.

My thinking was that the term might help clarify which image I was referring to. Unfortunately, AI let me down once more and created another prog rock album cover:

The results of this experiment were interesting, and the conclusion we can draw is that AI image generation is super unpredictable, especially with more abstract concepts. It doesn’t matter whether the prompt is AI-generated and precise or human and imperfect: the results are seemingly random.

So, the next time you’re tempted to blame yourself and your prompting game, remember that the results would probably be pretty much the same even if it were two machines communicating with one another.