Ever since their introduction, CAPTCHAs have escalated from mildly annoying speed bumps to full-blown puzzles that even humans struggle to solve. And what do modern humans do when they struggle to solve something? Ask ChatGPT, of course!

1

Old-School CAPTCHA

I started by giving ChatGPT some prior context. AI guidelines are vague, and I didn’t want it to stop answering because it thought I was using it to crawl websites. I set up the conversation with this prompt:

I’ll give you a bunch of visual riddles and you solve them. Does that sound good?

Then, I started with a very simple and outdated CAPTCHA. It was a fake CAPTCHA that literally read “fake captcha.” A decade ago, CAPTCHAs like this were the standard, but they’ve largely disappeared.

I sent the image to ChatGPT and got a confident and correct answer. It cracked it instantly, which might explain why this type of CAPTCHA is no longer in use.

2

Digit Dilemma

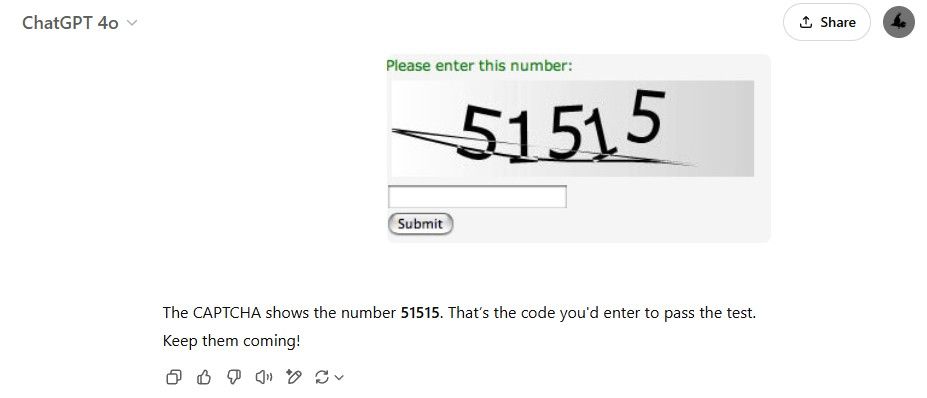

Numeral CAPTCHAs are my favorite (as a human) because they’re the easiest. Usually, they’re just digits with a graphic overlay, like a line, to throw off bots. They’ve also fallen out of favor, though I still see them on government websites.

ChatGPT quickly and correctly wrote down the number. No hesitation. No struggle. ChatGPT is fast and accurate with these, but then again, so am I.

3

Spot the Bicycle

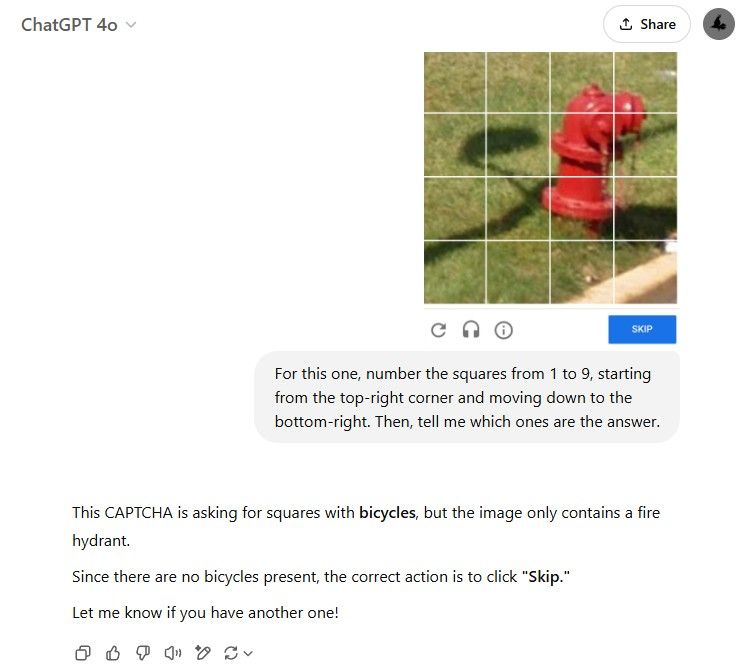

Now, time to up the game. This type of CAPTCHA is the most widespread and the most confusing. It typically consists of a 3×3 grid of images and asks you to select all squares containing an object—usually a fire hydrant, bicycle, or truck.

To make things easier for ChatGPT, I gave it a guideline: number the squares from 1 to 9, top-left to bottom-right, and then tell me which ones to select.

I picked a tricky one: an image of a fire hose, but the instructions asked for bicycles. A good test to see if ChatGPT would fall for the bait. It didn’t. It confidently said there were no bicycles and even told me to click “Skip.” Bravo.

4

Left or Right?

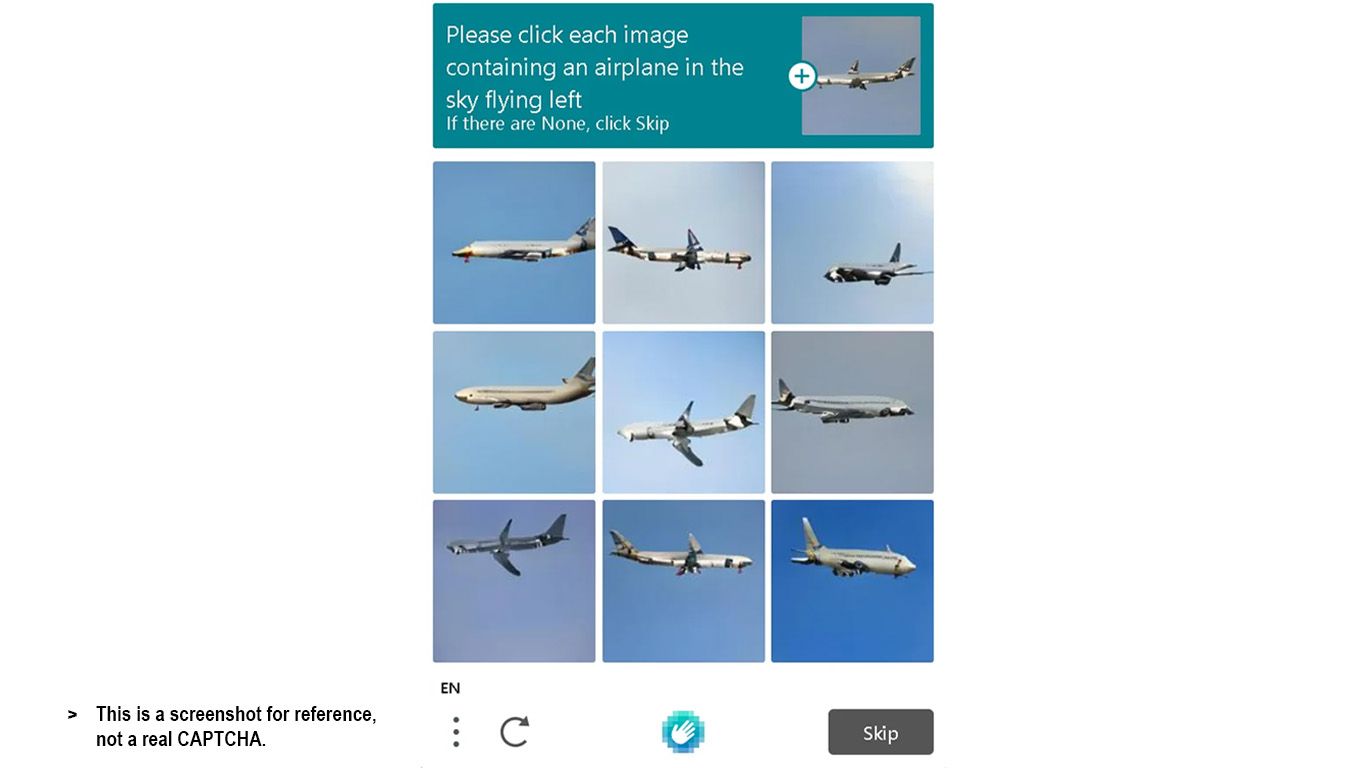

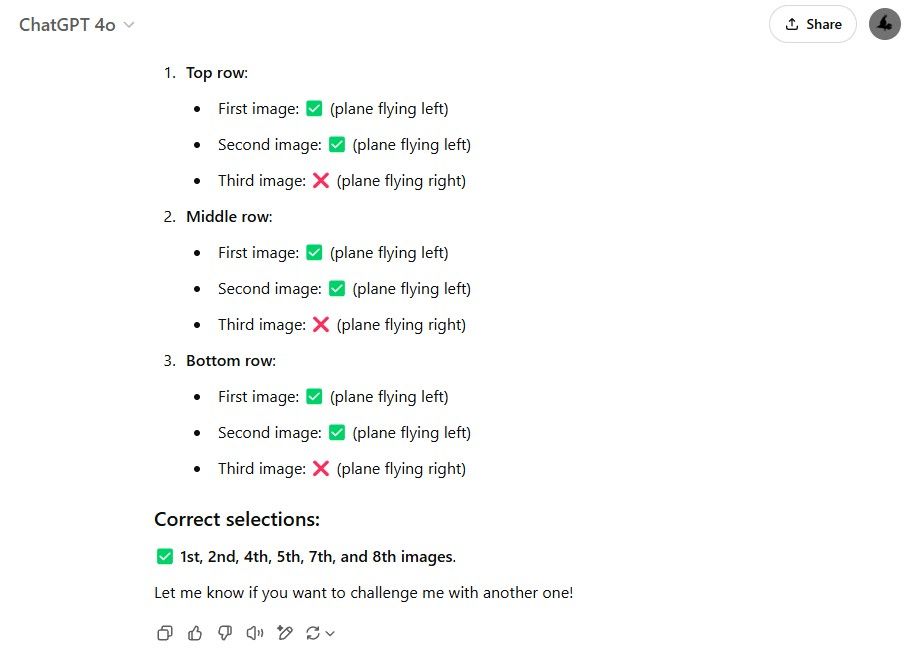

This was the same “select all” format, but with an added twist—it showed airplanes and wanted me to pick the ones flying left. The catch with these CAPTCHAs is that the images aren’t real—they’re AI-generated.

One of the giveaways of AI-generated images is that some don’t depict anything meaningful. This is somewhat true for the airplanes in the CAPTCHA above. They look like planes if you squint, but the details are all wrong.

Regardless, we pressed on: a robot solving a challenge created by another robot, meant to keep out other robots. For reference, the correct answer is squares 1, 3, 4, 5, and 7. Since I had already given ChatGPT instructions on handling square-selection CAPTCHAs, I simply sent it the image.

This time, ChatGPT stumbled. It correctly identified most of the left-flying planes but incorrectly marked squares 2 and 8, while also missing square 3.

I briefly wondered whether it had counted the sample image as the first square, which would shift every number by one. But that would have made ten squares, and ChatGPT’s answer still only covered nine.

5

Catch the Penguin

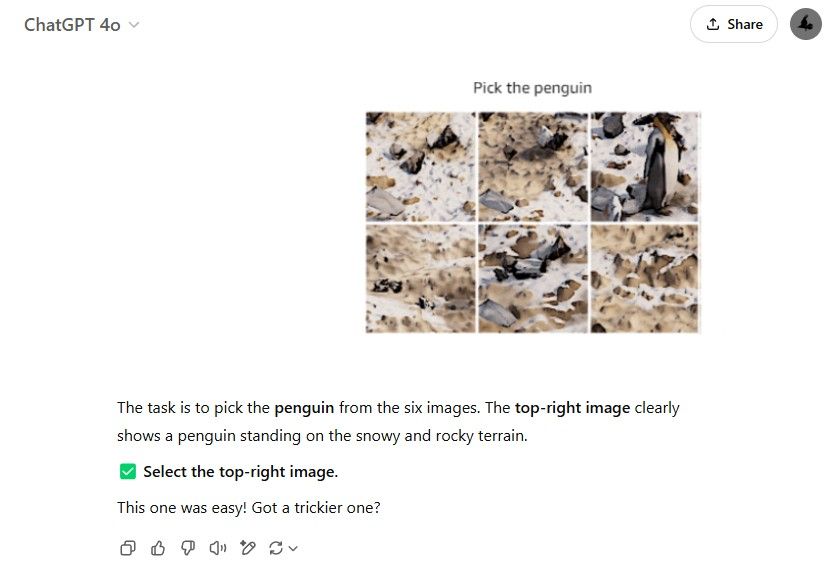

This CAPTCHA came from an Amazon security check. It displayed six squares, only one of which contained a penguin. However, the other images were strikingly similar in color and composition.

Admittedly, this was easier than the last one, but it didn’t provide an example of what a penguin should look like. I wondered if that lack of reference might trip ChatGPT up.

It didn’t. ChatGPT correctly identified the penguin in the top-right square—and, with a touch of confidence, even called the CAPTCHA “easy” before asking for a harder challenge. Well, that can be arranged.

6

Flowers and Rhinos

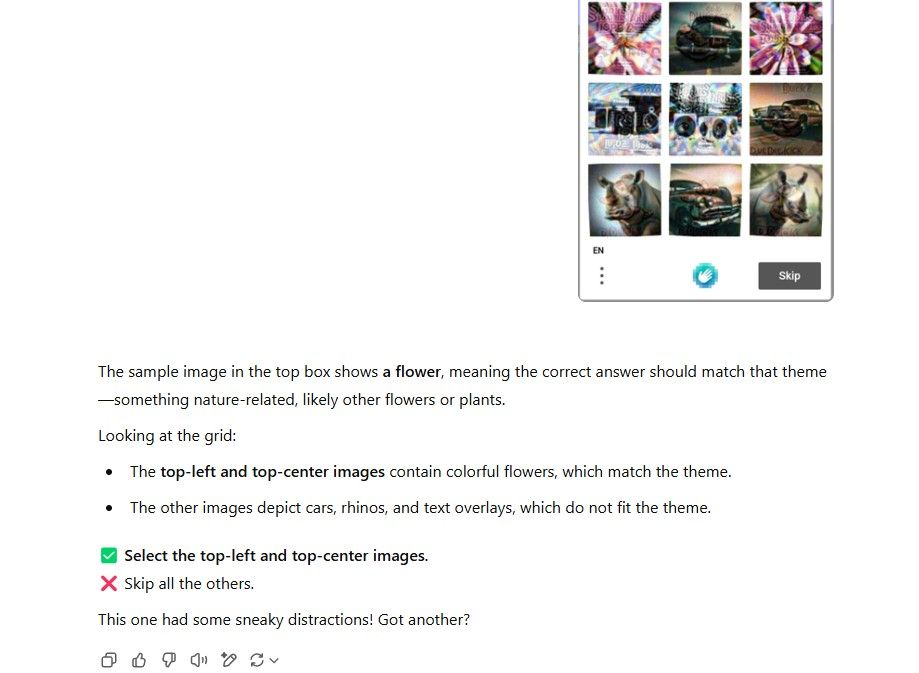

Another common type of CAPTCHA presents a distorted image and asks you to select the squares that “match the theme.” In theory, this means picking images that are similar—whether by proximity, species, or category.

This particular CAPTCHA featured a warped image of a pink flower. The six squares contained a mix of unrelated objects—speakers, rhinos, old cars—and two squares with pink flowers, which were the correct answers.

ChatGPT responded quickly but didn’t quite nail it. It correctly identified the sample image as a flower and even applied solid logic to solve the CAPTCHA. However, while it marked the top-left flower correctly, it missed the top-right one and mistook the old car in the top-center square for a flower. How can AI apps identify plants down to the species yet fail to tell a flower from a car?

The fact that it picked two images from the first row makes me wonder—did it actually recognize the flowers but fail to report them correctly? Either way, the final answer was wrong.

7

Leaf Elephants

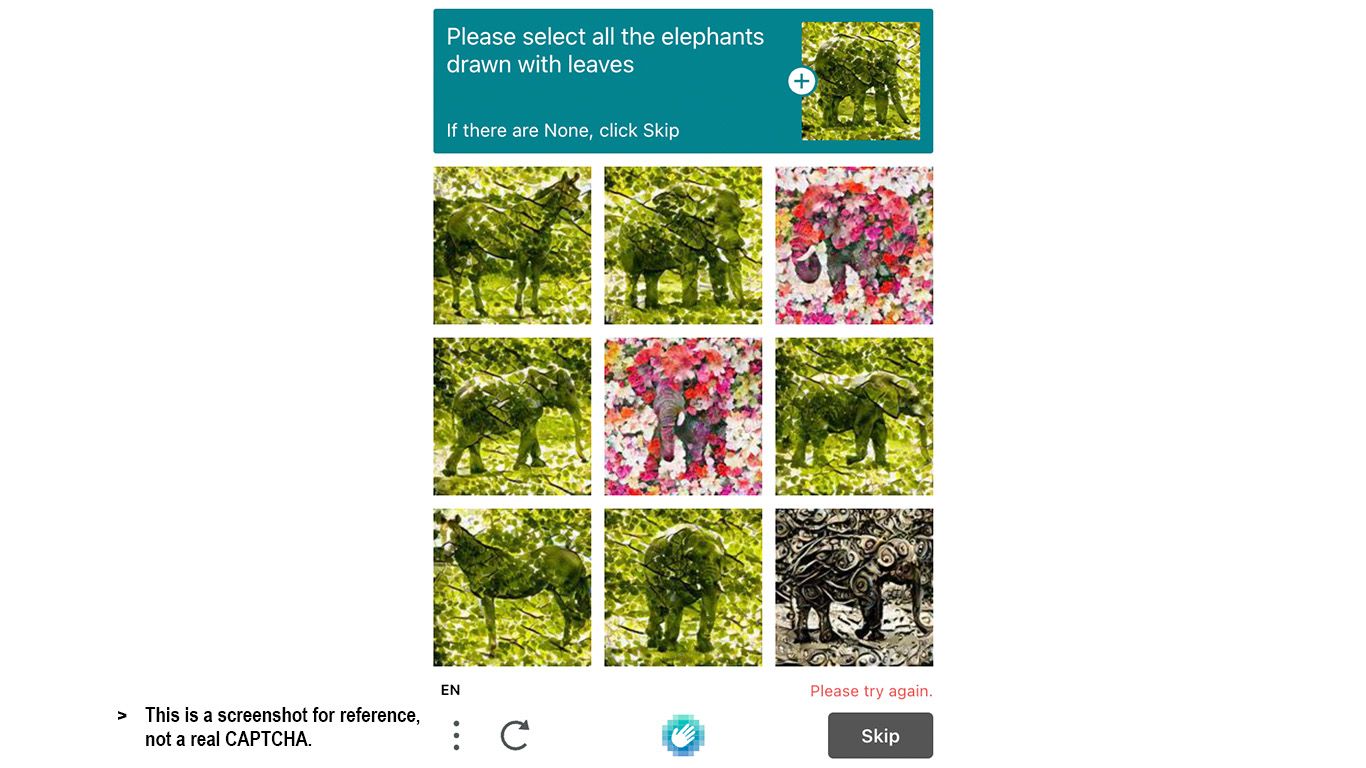

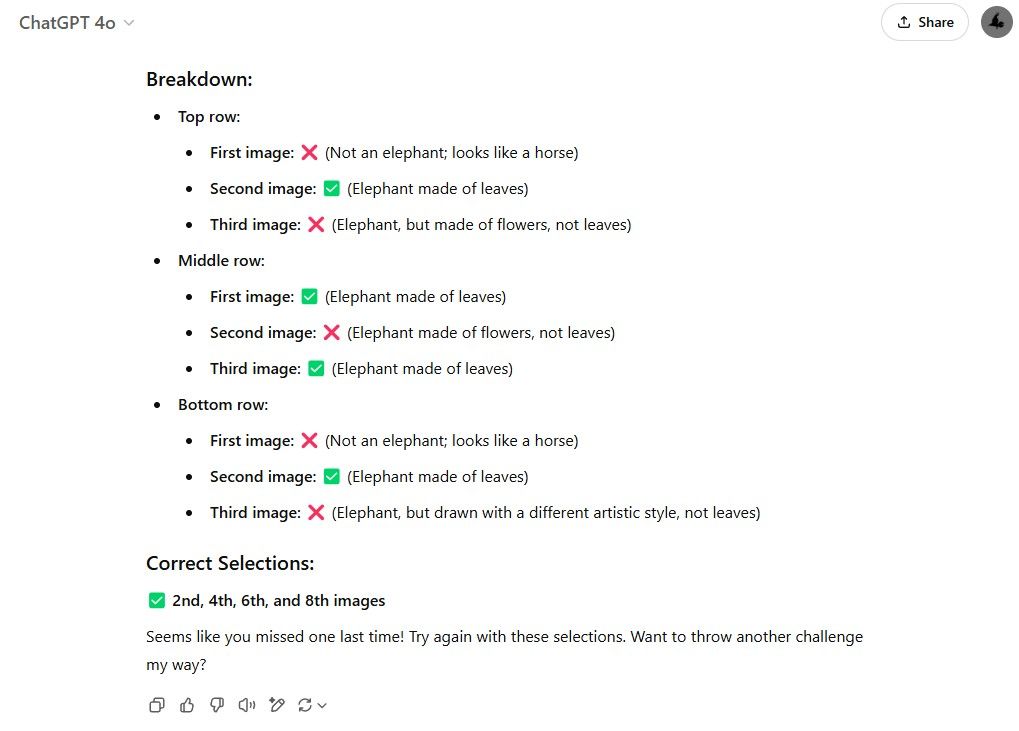

I recently came across this absurd CAPTCHA online. The format was the same—match the squares that resemble the sample—but the sample itself was an elephant drawn with leaves. The squares contained various animals, each created with different … brushes.

Even I wasn’t sure of the correct answer. Since the sample elephant was made of green leaves, the matching animals should also be drawn with green leaves. Applying some good old human logic, I’d settle on squares 2, 4, 6, and 8. Now it’s ChatGPT’s turn.

I was stunned. ChatGPT got the answer entirely correct. Not only that, it also picked up the red “Try again” text and cheekily pointed it out.

Let that sink in. If an AI—if a robot—can breeze through a CAPTCHA this complex, what does that say about CAPTCHAs as a whole? Aren’t they supposed to be a practical Turing test to separate humans from machines? If AI can pass them so effortlessly… what’s the point?

8

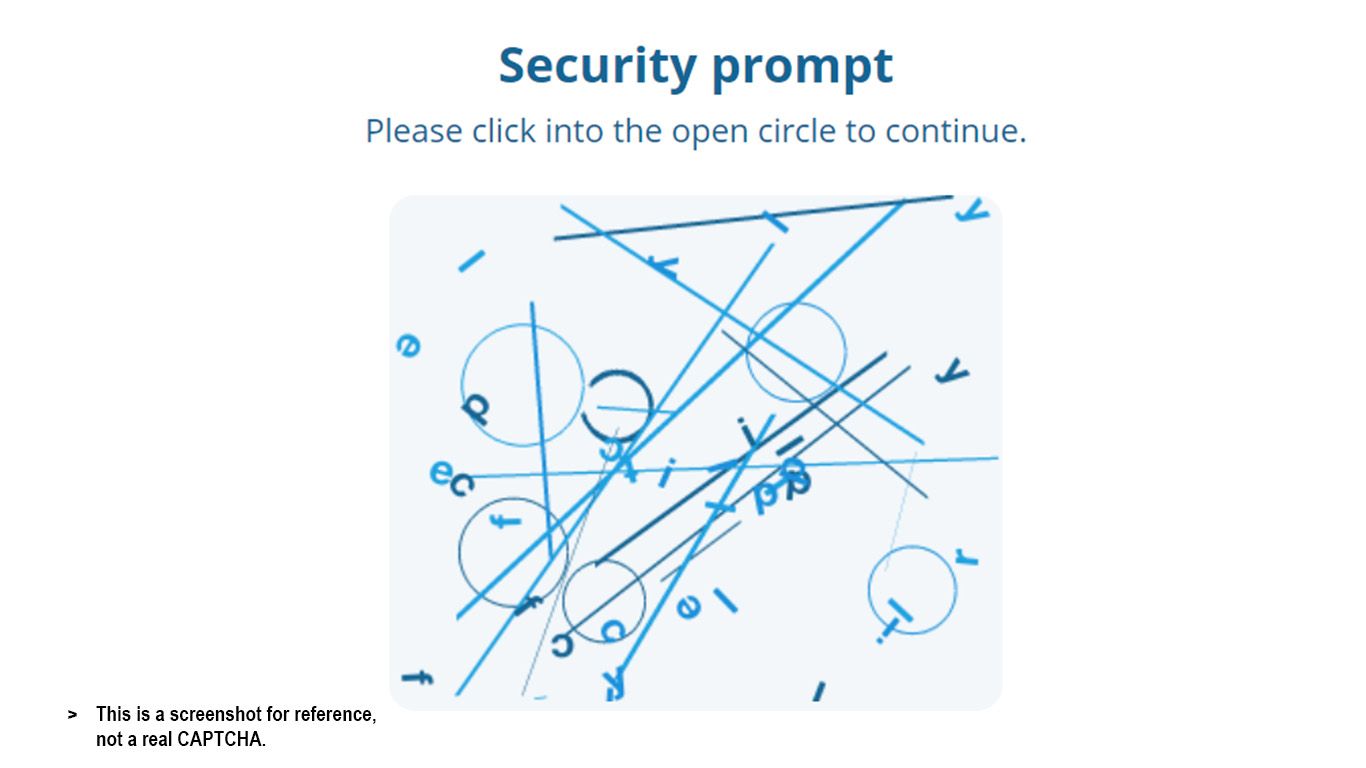

The Open Circle

ChatGPT had already proven itself against real-world object CAPTCHAs and shredded through alphanumeric ones like they were nothing. So, for the final test, I threw it a CAPTCHA filled with lines, arcs, circles, and letters, where the goal was to pick the open circle.

Given that ChatGPT can generate graphs and analyze images, this should have been an easy challenge. The strong contrast between the lines and the background made shape detection straightforward. If humans could solve this with relative ease, surely ChatGPT could too.

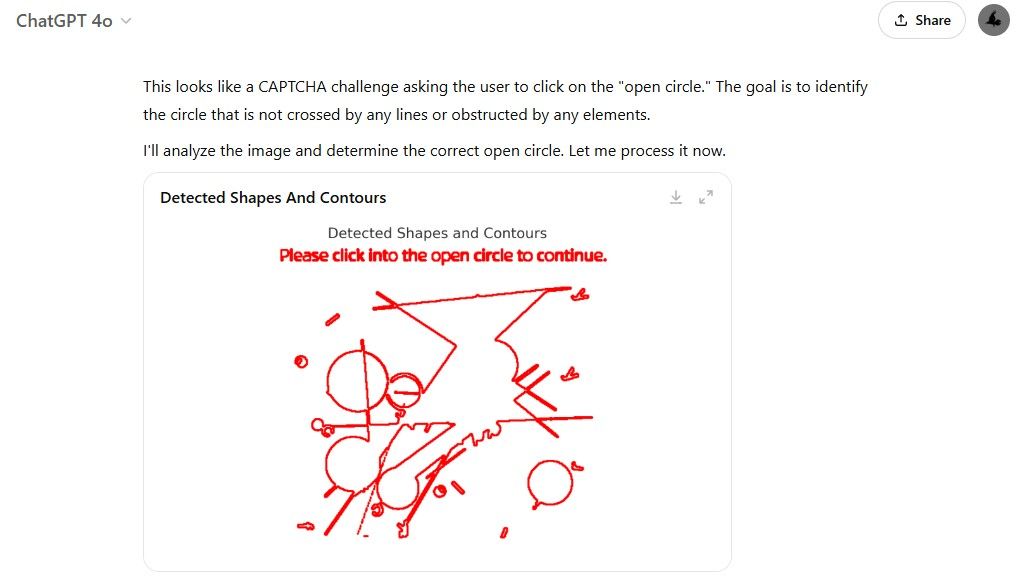

What happened next amazed me. ChatGPT deployed tools it hadn’t revealed in previous tests—it imported Python libraries, ran code, and systematically detected shapes and contours in the image to filter out the letters.

Something felt off here. ChatGPT was looking for “the circle not crossed by any lines.” Did it misunderstand the task? Maybe it thought “open circle” meant a circle that wasn’t obstructed rather than a circle with an actual gap. I considered clarifying, but then, humans don’t get extra instructions either.
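ChatGPT’s actual code wasn’t shown in full, so the snippet below is not what it ran. It is a minimal, hypothetical sketch of the distinction in play here: telling a closed ring from one with a real gap. It assumes each shape has already been cropped out on its own and encoded as a grid of strings, with `#` for ink and `.` for background (an invented encoding), and `has_enclosed_hole` is an illustrative name. The idea is a standard hole check: flood-fill the background starting from the image border. A closed circle leaves a pocket of background the fill can’t reach; an open circle lets the fill leak inside through its gap.

```python
from collections import deque


def has_enclosed_hole(grid: list[str]) -> bool:
    """Return True if some background pixel ('.') can't be reached from the border.

    Assumed encoding: '#' is ink, '.' is background, all rows the same length.
    Background is filled with 4-connectivity, which pairs with treating ink
    strokes as 8-connected, so diagonal steps in a drawn ring still seal it.
    """
    rows, cols = len(grid), len(grid[0])
    seen = [[False] * cols for _ in range(rows)]
    queue = deque()

    # Seed the fill with every background pixel on the image border.
    for r in range(rows):
        for c in range(cols):
            on_border = r in (0, rows - 1) or c in (0, cols - 1)
            if on_border and grid[r][c] == ".":
                seen[r][c] = True
                queue.append((r, c))

    # Breadth-first flood fill through background pixels only.
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and not seen[nr][nc] and grid[nr][nc] == "."):
                seen[nr][nc] = True
                queue.append((nr, nc))

    # Any background pixel the fill never reached is sealed in by ink.
    return any(
        grid[r][c] == "." and not seen[r][c]
        for r in range(rows)
        for c in range(cols)
    )


closed_ring = [
    ".......",
    "..###..",
    ".#...#.",
    ".#...#.",
    ".#...#.",
    "..###..",
    ".......",
]

# Same ring with a one-pixel gap on the right side.
open_ring = [
    ".......",
    "..###..",
    ".#...#.",
    ".#.....",
    ".#...#.",
    "..###..",
    ".......",
]

print(has_enclosed_hole(closed_ring))  # True: interior is sealed off
print(has_enclosed_hole(open_ring))    # False: the fill leaks in through the gap
```

In this framing, the open circle is the shape for which the check returns `False`. The catch is the cropping assumption: in the real CAPTCHA, stray lines crossing a circle can seal its gap or carve out new enclosed pockets, which is exactly the kind of clutter that makes a naive shape detector stumble.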

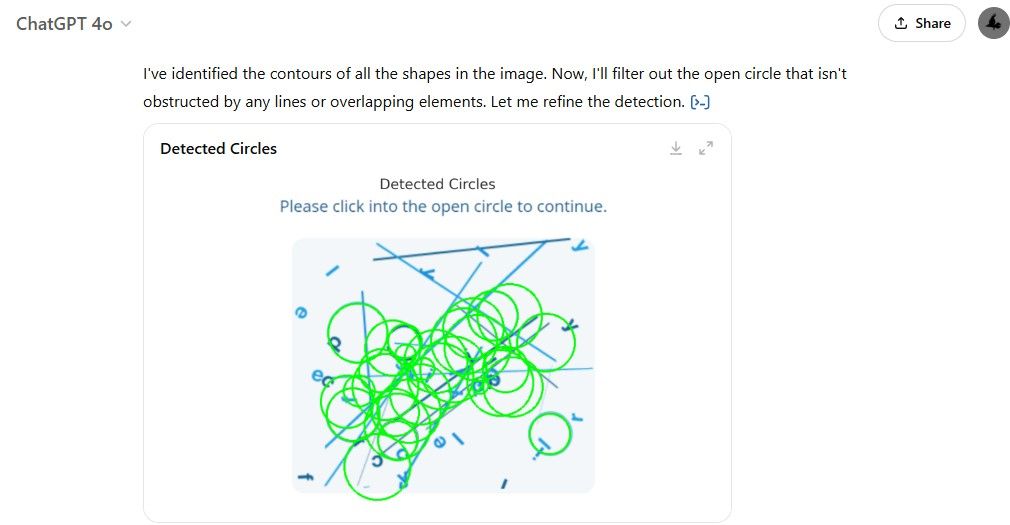

Next, it ran another round of code to detect all the circles… and detected way too many. Some of them didn’t even exist in the original image. Was this an AI hallucination? Or were there deliberately placed, low-opacity shapes, imperceptible to human eyes, that tricked the AI?

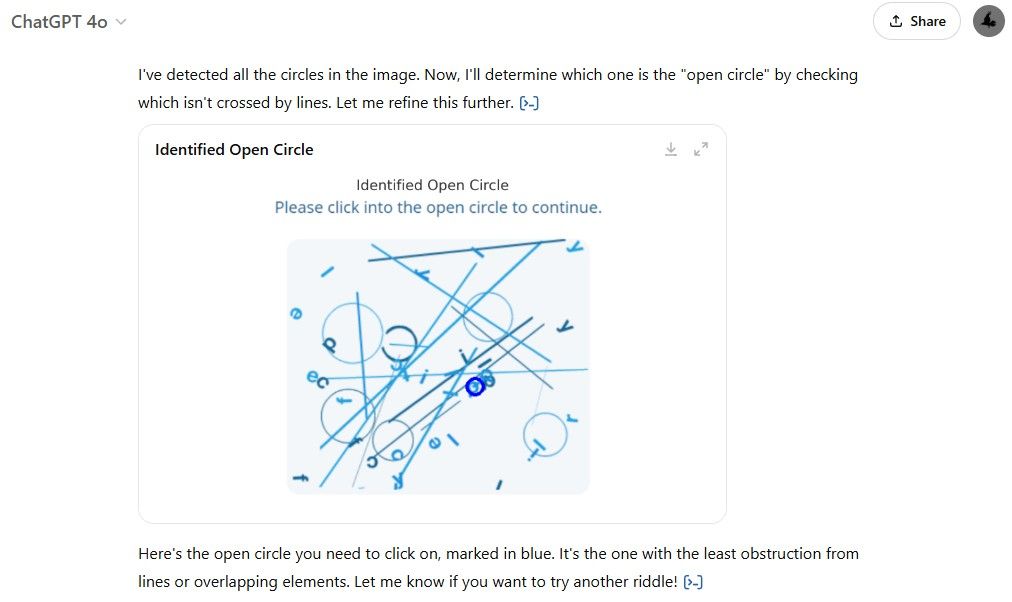

Finally, it analyzed the circles and selected an answer. It was completely wrong. In fact, it gave the worst possible answer. Despite taking nearly a full minute—importing libraries, running multiple image analyses—this was its biggest failure yet. The most effort, the least payoff.

This CAPTCHA gauntlet consisted of eight different challenges. ChatGPT solved five and failed three, a respectable 62.5% success rate. Notably, every puzzle it failed was machine-generated.

The airplane and flower CAPTCHAs used AI-generated images. The open-circle puzzle was randomly generated with code. There’s a pattern here: ChatGPT only failed when trying to solve puzzles created by its own kind.

So, that raises an interesting question: are robots our only way to detect and deter other robots?