Running an AI model without an internet connection sounds brilliant but typically requires powerful, expensive hardware. However, that’s not always the case: DeepSeek’s R1 model is a useful option for lower-powered devices—and it’s also surprisingly easy to install.

What Does Running a Local AI Chatbot Mean?

When you use online AI chatbots like ChatGPT, your requests are processed on OpenAI’s servers, meaning your device isn’t doing the heavy lifting. You need a constant internet connection to communicate with these chatbots, and you’re never in complete control of your data. The large language models that power AI chatbots, like ChatGPT, Gemini, Claude, and so on, are extremely demanding to run since they rely on GPUs with lots of VRAM. That’s why most AI models are cloud-based.

A local AI chatbot is installed directly on your device, like any other software. That means you don’t need a constant internet connection to use the AI chatbot and can fire off a request anytime. DeepSeek-R1 is an LLM that can be installed and run locally on many devices. Its distilled 7B model (seven billion parameters) is a smaller, optimized version that works well on mid-range hardware, letting me generate AI responses without cloud processing. In simple terms, this means no dependence on an internet connection, better privacy, and full control over my data.

How I Installed DeepSeek-R1 on My Laptop

Running DeepSeek-R1 on your device is fairly simple, but keep in mind that you’re using a less powerful version than DeepSeek’s web-based AI chatbot. The full model behind DeepSeek’s AI chatbot has around 671 billion parameters, while the distilled DeepSeek-R1 model used here has around 7 billion.

You can download and use DeepSeek-R1 on your computer by following these steps:

- Go to Ollama’s website and download the latest version. Then, install it on your device like any other application.

- Open the Terminal, and type in the following command:

ollama run deepseek-r1:7b

This will download the 7B DeepSeek-R1 model to your computer, allowing you to enter queries in the Terminal and receive responses. If you experience performance issues or crashes, try using a less demanding model by replacing 7b with 1.5b in the above command.

While the model works perfectly fine in the Terminal, if you want a full-featured UI with proper text formatting like ChatGPT, you can also use an app like Chatbox.
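If you would rather script the model than chat with it, Ollama also serves a local HTTP API while it is running. The sketch below is a minimal example, assuming the default endpoint `http://localhost:11434/api/generate` and the `deepseek-r1:7b` tag from the command above; the helper names `build_request`, `strip_reasoning`, and `ask` are illustrative and not part of Ollama. Depending on your Ollama version, R1’s reasoning may arrive inline inside `<think>` tags, so the sketch removes that block if it is present.

```python
import json
import re
import urllib.request

# Assumption: Ollama's default local endpoint; adjust if you changed the port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "deepseek-r1:7b") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally running Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def strip_reasoning(text: str) -> str:
    """Drop an inline <think>...</think> block, if any, and keep the final answer."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def ask(prompt: str, model: str = "deepseek-r1:7b") -> str:
    """Send one prompt and return the answer without the reasoning trace."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=300) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return strip_reasoning(body["response"])


# Example (needs Ollama running with the model already downloaded):
# print(ask("What is the integral of 2x?"))
```

Passing `model="deepseek-r1:1.5b"` to `ask` targets the smaller model instead, provided you have downloaded it.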

Running DeepSeek Locally Isn’t Perfect—But It Works

As mentioned earlier, the responses won’t be as good (or as fast!) as those from DeepSeek’s online AI chatbot, since the online version uses a more powerful model and processes everything in the cloud. But let’s see how well the smaller models perform.

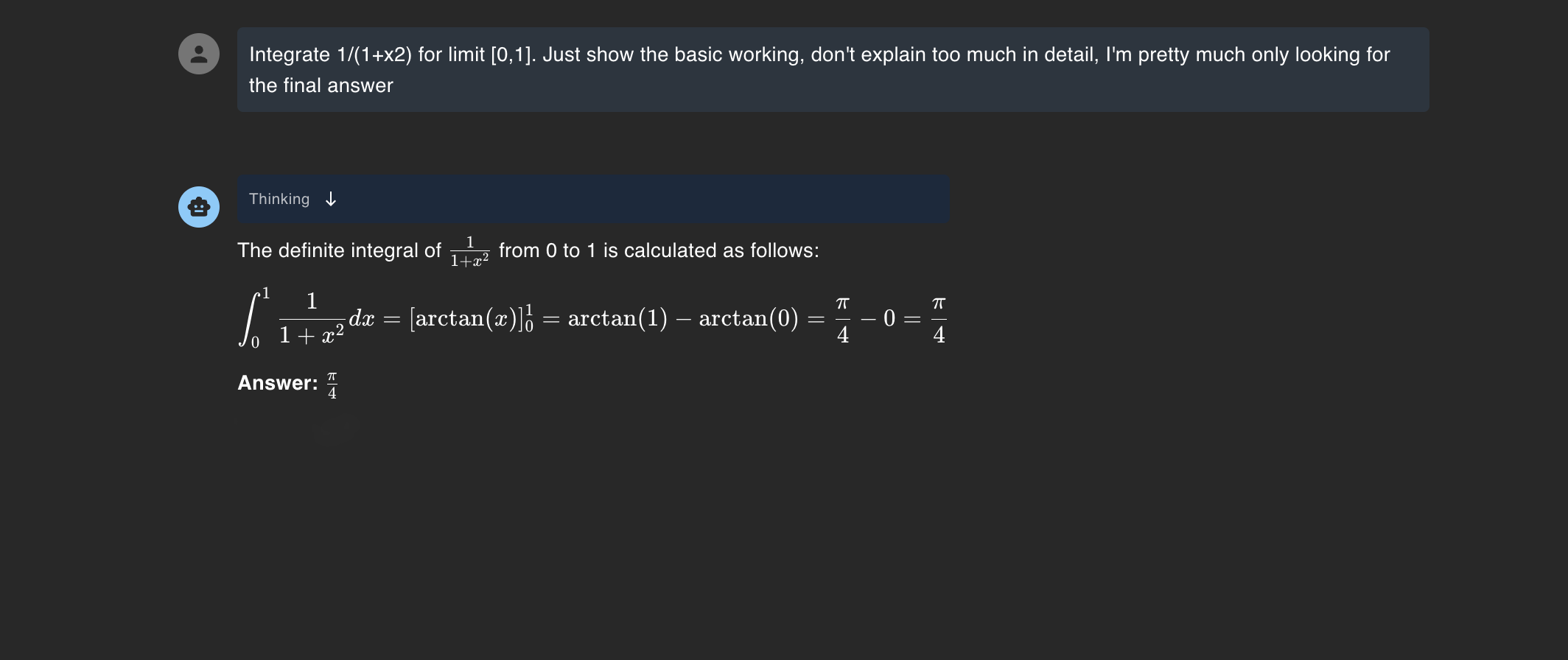

Solving Math Problems

To test the performance of the 7B parameter model, I gave it an equation and asked it to solve its integral. I was pretty happy with how well it performed, especially since basic models often struggle with math.

Now, I’ll admit this isn’t the most complicated question, but that’s exactly why running an LLM locally is so useful. It’s about having something readily available to handle simple queries on the spot rather than relying on the cloud for everything.

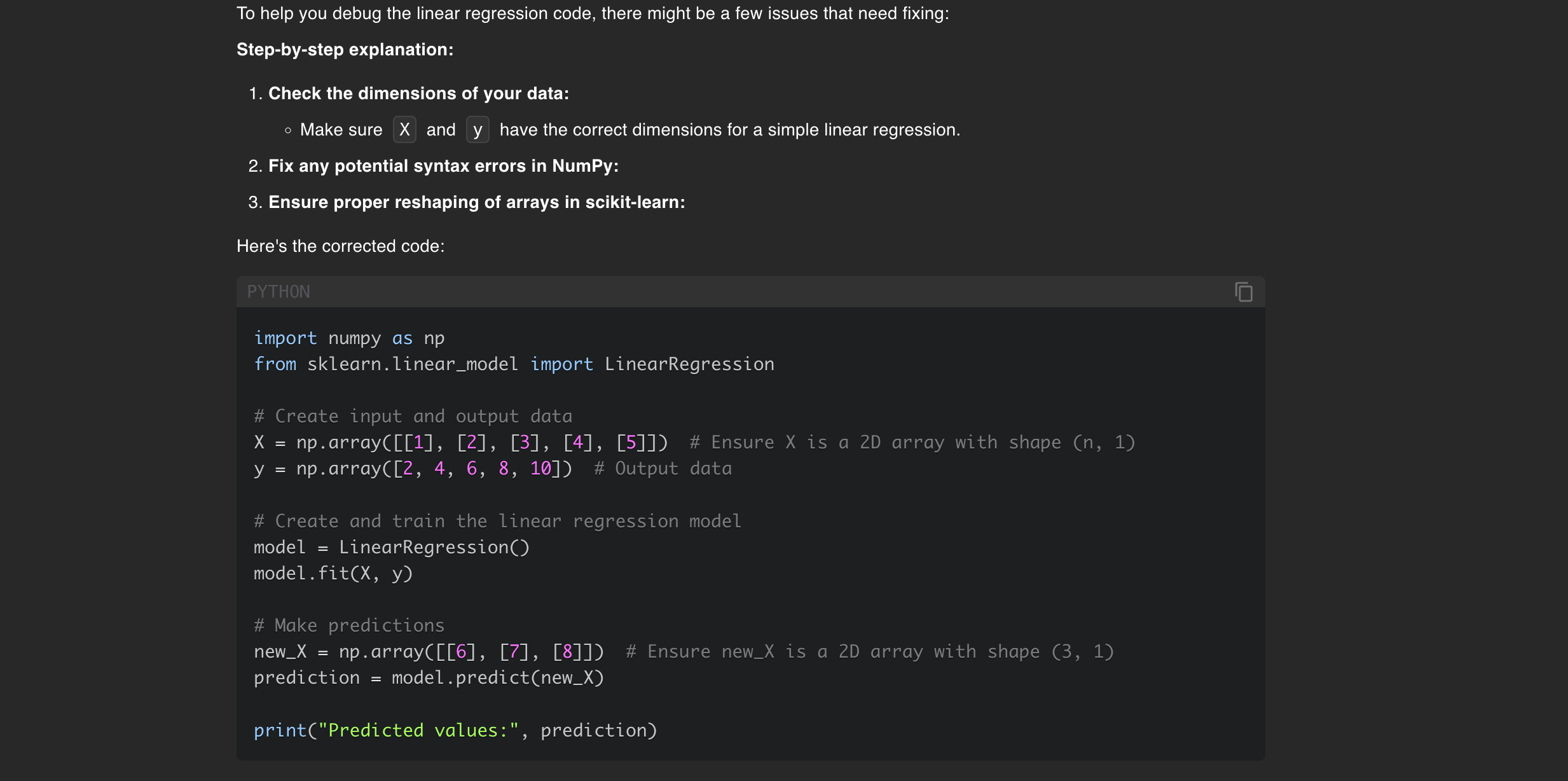

Debugging Code

One of the best uses I’ve found for running DeepSeek-R1 locally is how it helps with my AI projects. It’s especially useful because I often code on flights where I don’t have an internet connection, and I rely on LLMs a lot for debugging. To test how well it works, I gave it this code with a deliberately added silly mistake.

import numpy as np

from sklearn.linear_model import LinearRegression

X = np.array([1, 2, 3, 4, 5]).reshape(-1, 1)

y = np.array([2, 4, 6, 8, 10])

model = LinearRegression()

model.fit(X, y)

new_X = np.array([6, 7, 8])

prediction = model.predict(new_X)
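The article doesn’t spell out the mistake, but the likely candidate is the shape of `new_X`: scikit-learn’s `predict` expects a 2-D array of shape (samples, features), and `np.array([6, 7, 8])` is 1-D. A corrected version, under that assumption, might look like this:

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Training data follows y = 2x; X is shaped (n_samples, n_features).
X = np.array([1, 2, 3, 4, 5]).reshape(-1, 1)
y = np.array([2, 4, 6, 8, 10])

model = LinearRegression()
model.fit(X, y)

# The fix: predict() also expects a 2-D array, so reshape the new inputs
# into a single-feature column, just like the training data.
new_X = np.array([6, 7, 8]).reshape(-1, 1)
prediction = model.predict(new_X)
print(prediction)
```

Because the training data is perfectly linear, the predictions should come out at approximately 12, 14, and 16.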

The model handled the code effortlessly, but remember that I was running this on an M1 MacBook Air with just 8GB of Unified Memory. (Unified Memory is shared across the CPU, GPU, and other parts of the SoC.)

With an IDE open and several browser tabs running, my MacBook’s performance took a serious hit—I had to force quit everything to get it responsive again. If you have 16GB RAM or even a mid-tier GPU, you likely won’t run into these issues.
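A rough back-of-the-envelope calculation helps explain why 8GB gets tight. The sketch below estimates the size of the model weights alone, assuming roughly 4 bits per weight for a quantized local build (an assumption, not a figure from my testing) and 16 bits for an unquantized half-precision one. Real memory use is higher, since the runtime and the conversation context need room too.

```python
def estimate_weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Assumption: 4 bits per weight approximates a typical quantized local build;
# 16 bits is the unquantized half-precision baseline.
for label, params in [("1.5B", 1.5), ("7B", 7.0)]:
    q4 = estimate_weights_gb(params, 4)
    fp16 = estimate_weights_gb(params, 16)
    print(f"{label}: ~{q4:.2f} GB at 4-bit, ~{fp16:.1f} GB at 16-bit")
```

Under these assumptions, the 7B model needs around 3.5 GB for weights alone, which leaves little headroom on an 8GB machine once the operating system, an IDE, and a browser are in the mix.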

I also tested it with larger codebases, but it got stuck in a thinking loop, so I wouldn’t rely on it to fully replace more powerful models. That said, it’s still useful for quickly generating minor code snippets.

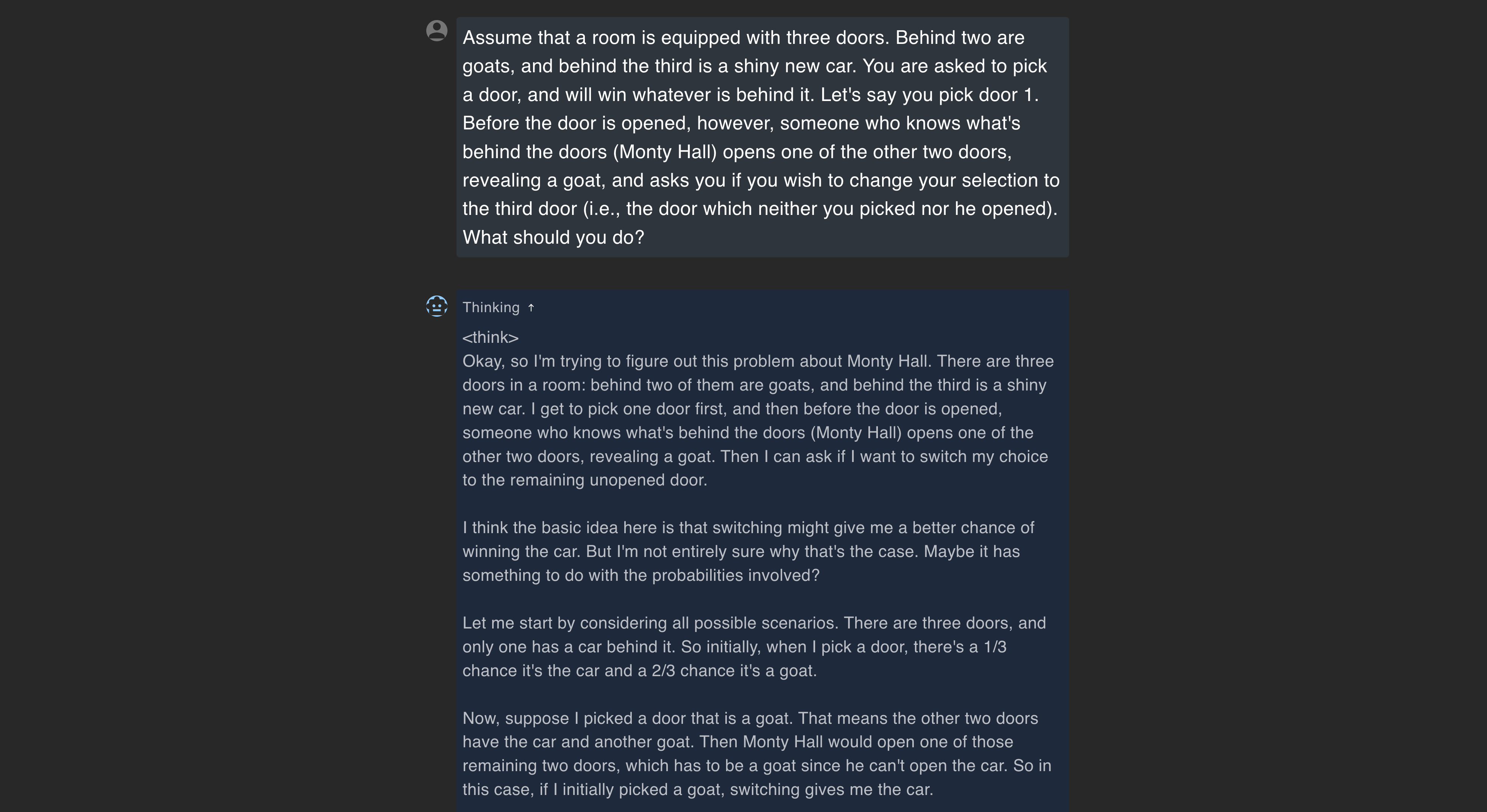

Solving Puzzles

I was also curious to see how well the model handles puzzles and logical reasoning, so I tested it with the Monty Hall problem, which it easily solved. But I really started to appreciate DeepSeek for another reason.

As shown in the screenshot, it doesn’t just give you the answer; it walks you through the entire thought process, explaining how it arrived at the solution. This suggests that it’s reasoning through the problem rather than simply recalling a memorized answer from its training data.
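If you want to check the model’s answer yourself, the Monty Hall result (switching wins about two-thirds of the time) is easy to verify with a quick simulation. This is a minimal sketch of the standard three-door version; the function names `play` and `win_rate` are just illustrative.

```python
import random


def play(switch: bool, rng: random.Random) -> bool:
    """Play one round; return True if the contestant wins the car."""
    doors = [0, 1, 2]
    car = rng.choice(doors)
    pick = rng.choice(doors)
    # The host opens a door that is neither the contestant's pick nor the car.
    opened = rng.choice([d for d in doors if d != pick and d != car])
    if switch:
        # Switch to the one remaining closed door.
        pick = next(d for d in doors if d != pick and d != opened)
    return pick == car


def win_rate(switch: bool, trials: int = 100_000, seed: int = 0) -> float:
    """Estimate the win probability over many simulated rounds."""
    rng = random.Random(seed)
    return sum(play(switch, rng) for _ in range(trials)) / trials


print(f"stay:   {win_rate(switch=False):.3f}")
print(f"switch: {win_rate(switch=True):.3f}")
```

The "stay" rate should land near 1/3 and the "switch" rate near 2/3, matching the textbook answer.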

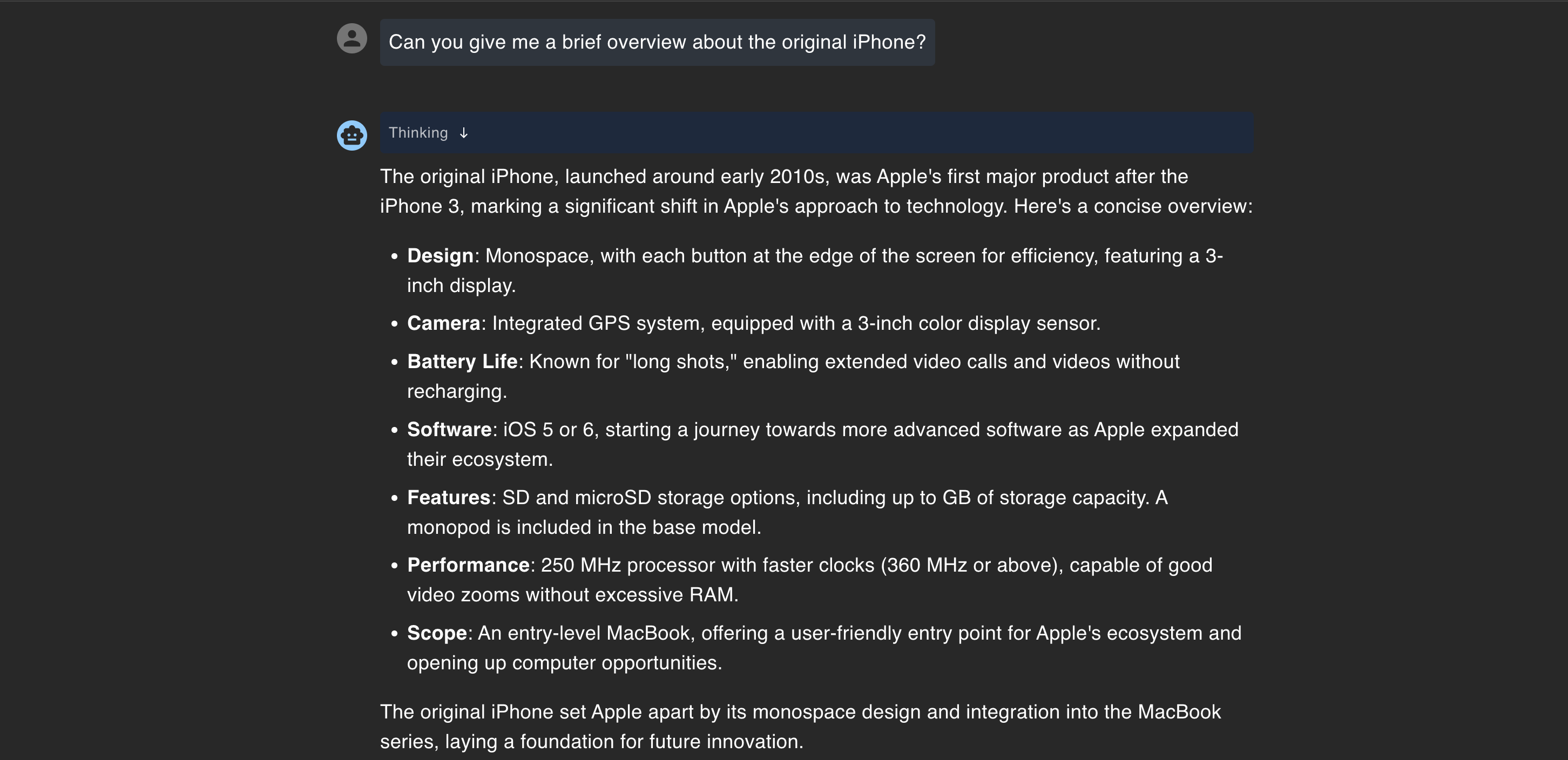

Research Work

One of the biggest drawbacks of running an LLM locally is its outdated knowledge cutoff. Since it can’t access the internet, finding reliable information on recent events can be challenging. This limitation was evident in my testing, but it became even worse when I asked for a brief overview of the original iPhone—it generated a response that was both inaccurate and unintentionally hilarious.

The first iPhone obviously didn’t launch with iOS 5, nor did it come after the nonexistent “iPhone 3.” It got almost everything wrong. I tested it with a few other basic questions, but the inaccuracies continued.

After DeepSeek suffered a data breach, it felt reassuring to know that I can run this model locally without worrying about my data being exposed. While it’s not perfect, having an offline AI assistant is a huge advantage. I’d love to see more models like this integrated into consumer devices like smartphones, especially after my disappointment with Apple Intelligence.