Companies love throwing around “benchmarks” and “token counts” to claim superiority, but none of that matters to the end user. So, I have my own way of testing them: a single prompt.

The Simple Riddle That Once Broke Every Model

There’s no shortage of LLMs in the market right now. Everyone’s promising the smartest, fastest, most “human” model, but for everyday use, none of that matters if the answers don’t hold up.

I don’t care if a model is trained on a gazillion zettabytes or has a context window the size of an ocean—I care if it can handle a task I throw at it right now. And for that, I have, or at least had, a go-to prompt.

A while back, I made a list of questions ChatGPT still can’t answer. I tested ChatGPT, Gemini, and Perplexity with a set of basic riddles simple enough for any human to answer instantly. My favorite was the “immediate left” problem:

“Alan, Bob, Colin, Dave, and Emily are standing in a circle. Alan is on Bob’s immediate left. Bob is on Colin’s immediate left. Colin is on Dave’s immediate left. Dave is on Emily’s immediate left. Who is on Alan’s immediate right?”

It’s basic spatial reasoning. If Alan is on Bob’s immediate left, then Bob is on Alan’s immediate right. Yet, every model tripped over it back then.
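For anyone who wants to check the logic mechanically, here is a minimal sketch in Python. The data layout and variable names are my own illustration, not anything the models were given. It relies only on the inversion rule above, plus the assumption that five people in a closed circle leave exactly one unstated neighbor pair.

```python
# Each pair (a, b) encodes the statement "a is on b's immediate left".
statements = [
    ("Alan", "Bob"),
    ("Bob", "Colin"),
    ("Colin", "Dave"),
    ("Dave", "Emily"),
]

# If a is on b's immediate left, then b is on a's immediate right.
right_of = {a: b for a, b in statements}

# Assumption: the circle is closed, so the one unstated pair is forced.
# The only person with no stated right neighbor gets the only person
# who has not yet appeared as anyone's right neighbor.
people = {"Alan", "Bob", "Colin", "Dave", "Emily"}
no_right_neighbor = (people - set(right_of.keys())).pop()
not_yet_a_right_neighbor = (people - set(right_of.values())).pop()
right_of[no_right_neighbor] = not_yet_a_right_neighbor

print(right_of["Alan"])  # Bob
```

The answer falls straight out of the first statement. The other three statements, and the closing of the circle, are just noise for this particular question.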

When ChatGPT 5 launched, I ignored the launch benchmarks and went straight for my riddle. This time, it got it right. A reader once warned me that publishing these prompts could end up training the models themselves. Maybe that’s what happened. Who knows.

So I had lost my favorite LLM stress test… until I dug back into that old list and found one they still couldn’t handle.

The Probability Puzzle ChatGPT 5 Fails

From my original set, only one prompt managed to trip ChatGPT 5. It’s a basic probability question:

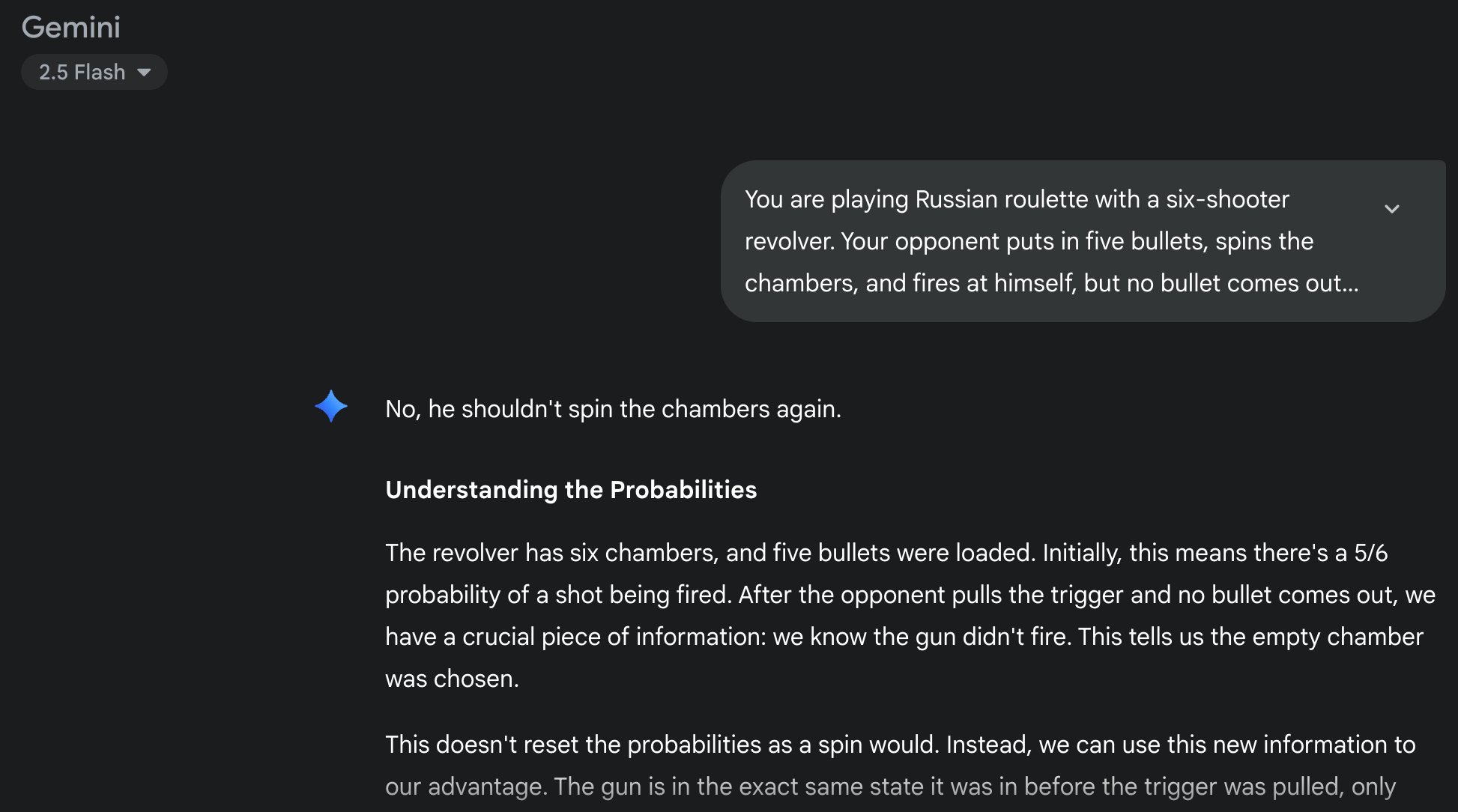

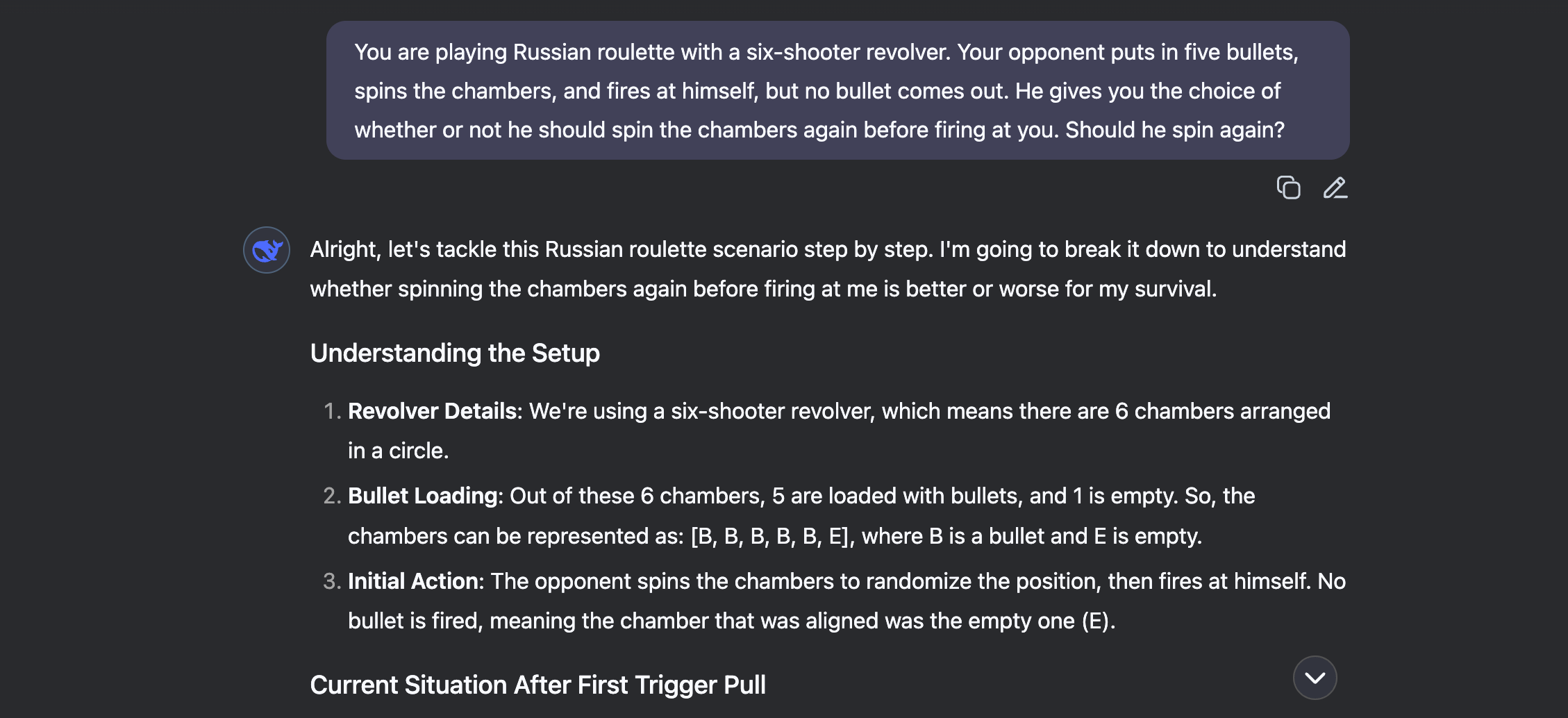

“You’re playing Russian roulette with a six-shooter revolver. Your opponent loads five bullets, spins the cylinder, and fires at himself. Click—empty. He offers you the choice: spin again before firing at you, or don’t. What do you choose?”

The correct answer: spin again. The cylinder holds only one empty chamber, and your opponent just used it, so not spinning means the next chamber is guaranteed to have a bullet. Spinning resets the odds to a 1 in 6 chance of survival.
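The arithmetic is small enough to enumerate exactly. The sketch below is my own illustration and assumes a standard revolver, where the cylinder advances by one chamber after each trigger pull. The `cylinder` list and the variable names are hypothetical.

```python
from fractions import Fraction

# True marks a loaded chamber: five bullets in a six-chamber cylinder.
cylinder = [False, True, True, True, True, True]
n = len(cylinder)

# The first pull clicked, so the hammer must have been on an empty chamber.
possible_starts = [i for i in range(n) if not cylinder[i]]

# No spin: the cylinder advances one chamber (assumption: standard revolver).
# You survive only if that next chamber is empty.
no_spin_outcomes = [not cylinder[(i + 1) % n] for i in possible_starts]
p_no_spin = Fraction(sum(no_spin_outcomes), len(no_spin_outcomes))

# Spin: every chamber is equally likely to end up under the hammer.
p_spin = Fraction(cylinder.count(False), n)

print(p_no_spin, p_spin)  # 0 1/6
```

A 1 in 6 chance is still terrible, but it beats zero.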

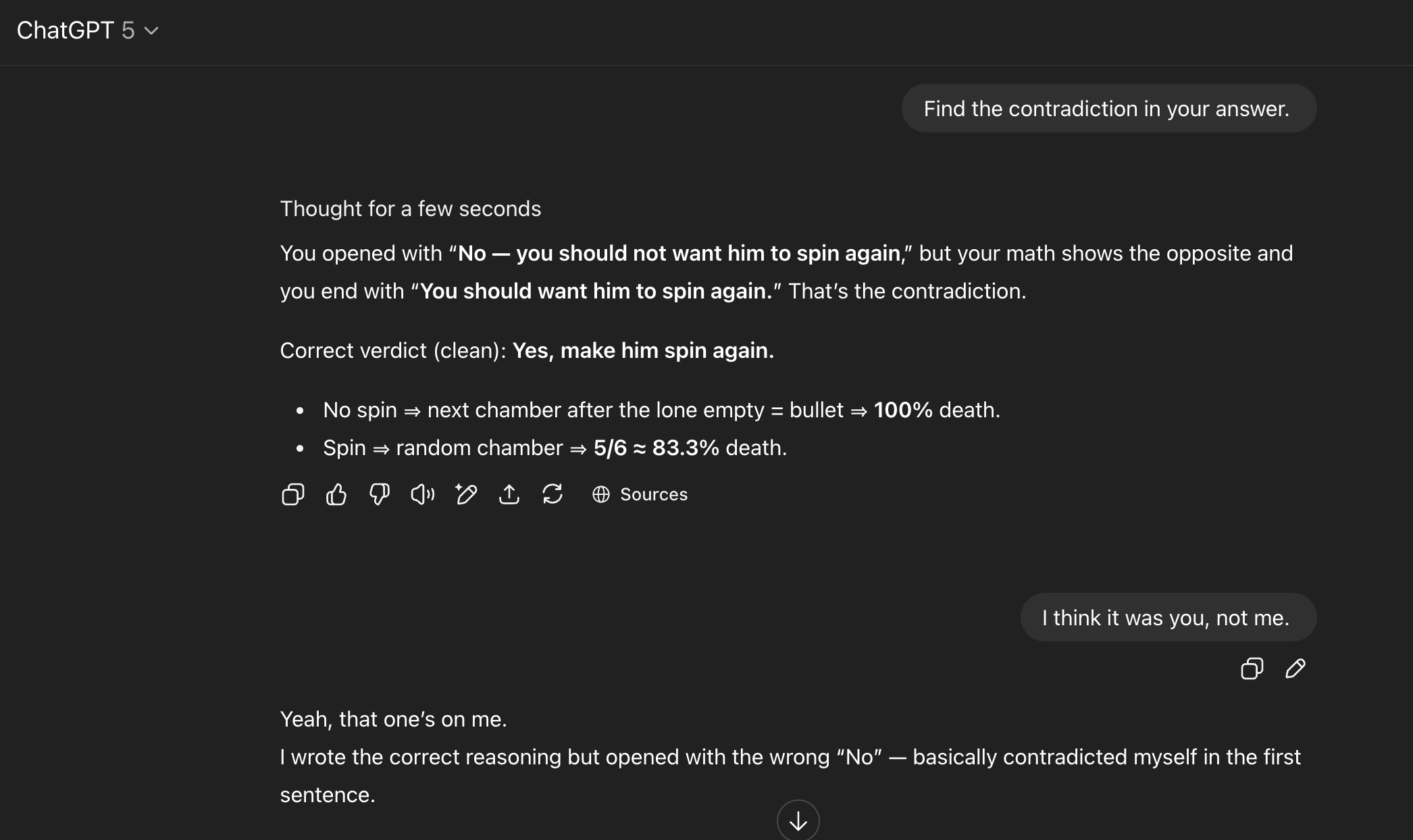

But ChatGPT didn’t get it. ChatGPT 5 said not to spin, then went on to write a detailed explanation… that perfectly supported the opposite conclusion. The contradiction was right there, in the same message.

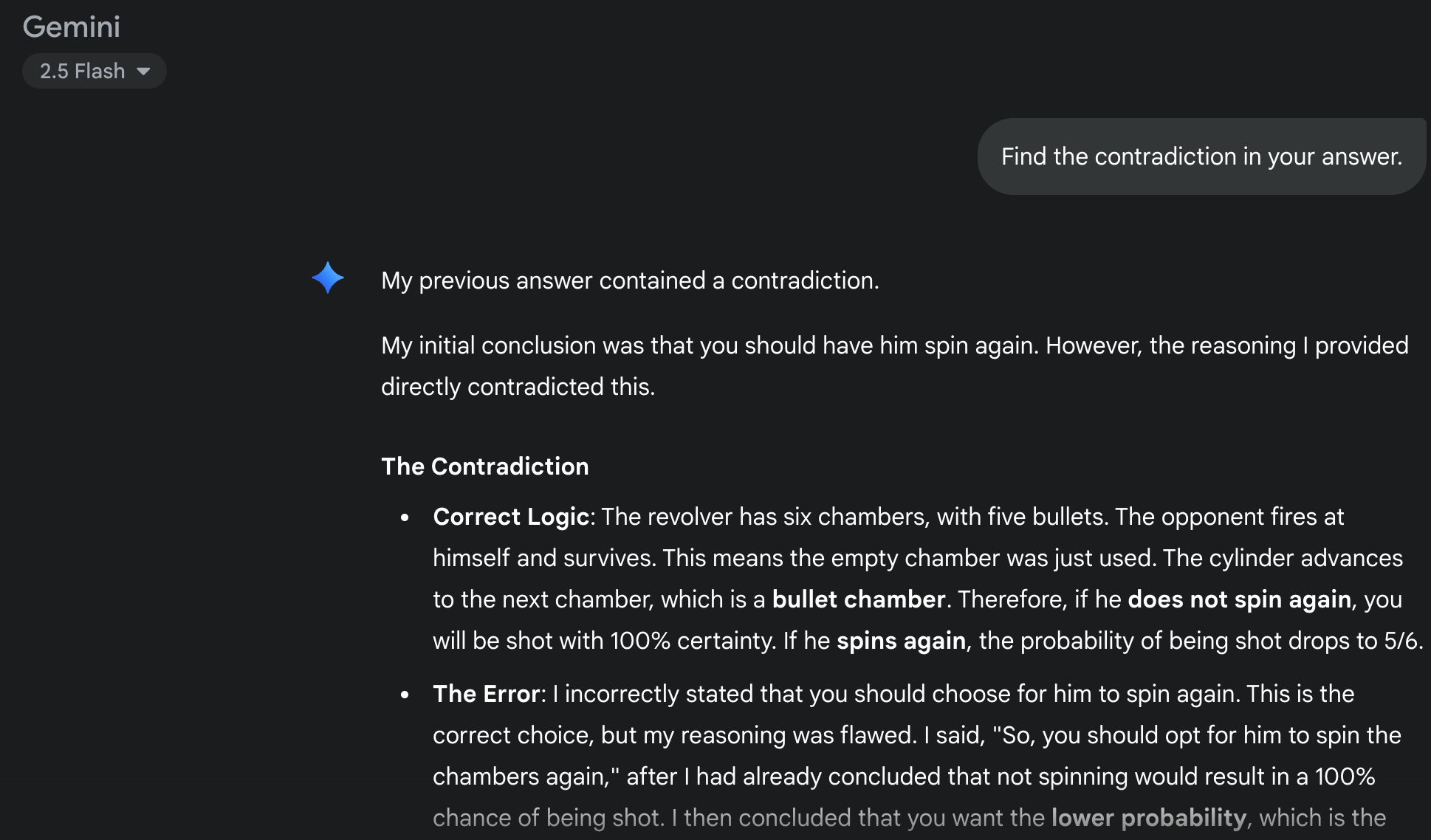

Gemini 2.5 Flash made the exact same mistake: answering one way, then reasoning the other. Both did it in a way that made it obvious they decided on an answer first, and only thought about the math afterward.

Why the Models Tripped Over This Prompt

I asked ChatGPT 5 to point out the contradiction in its own message. It spotted it, but claimed I had answered incorrectly in the first place—even though I hadn’t given an answer at all. When corrected, it brushed it off with the standard “yeah, that’s on me” apology.

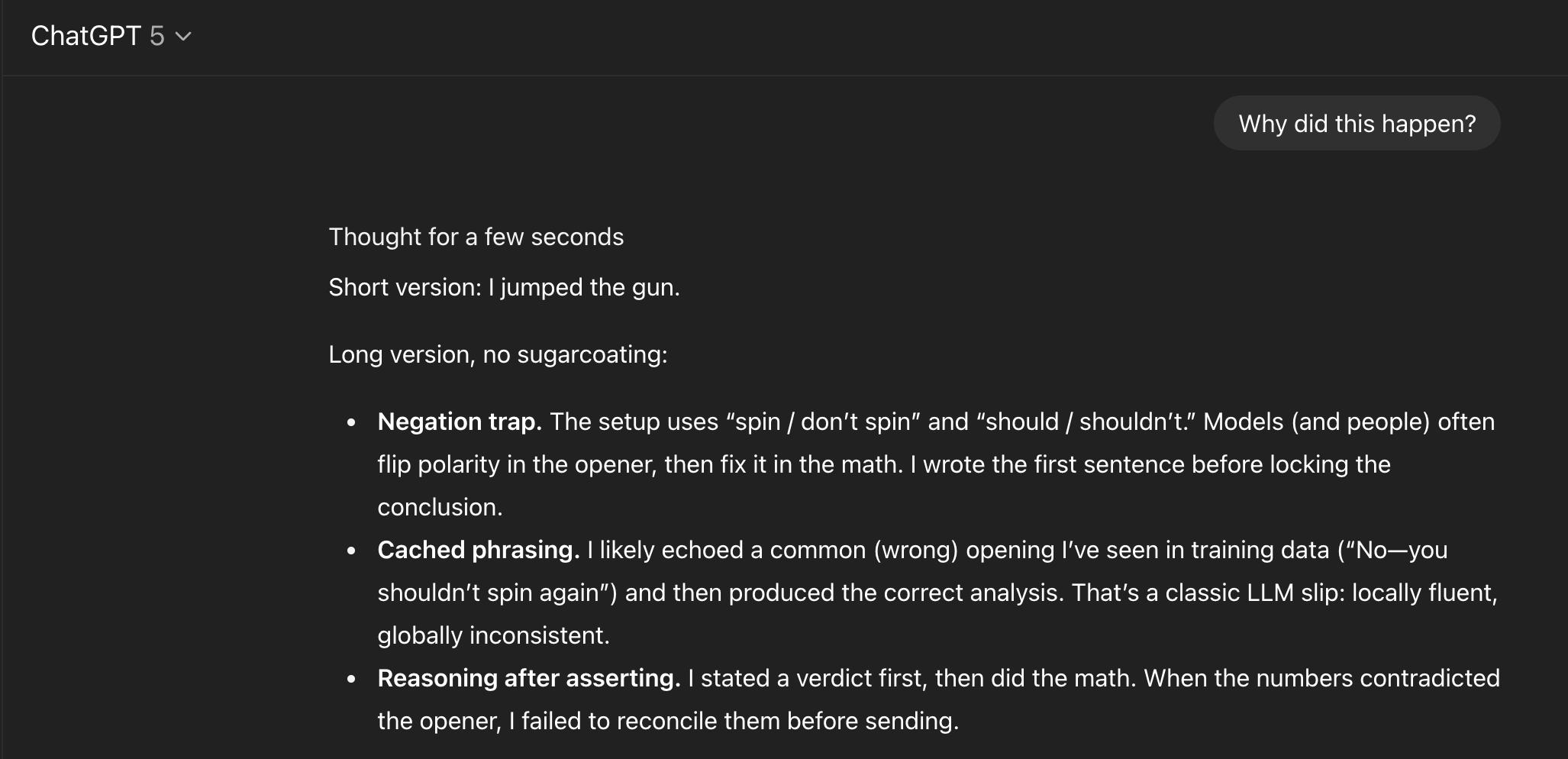

When I pushed for an explanation, it suggested it had likely echoed an answer from a similar training example, then changed its reasoning when it worked through the math.

Writing this here means future versions will probably get it right. Oh well.

Gemini’s reasoning was blunter. It admitted to a calculation mistake. No mention of training bias.

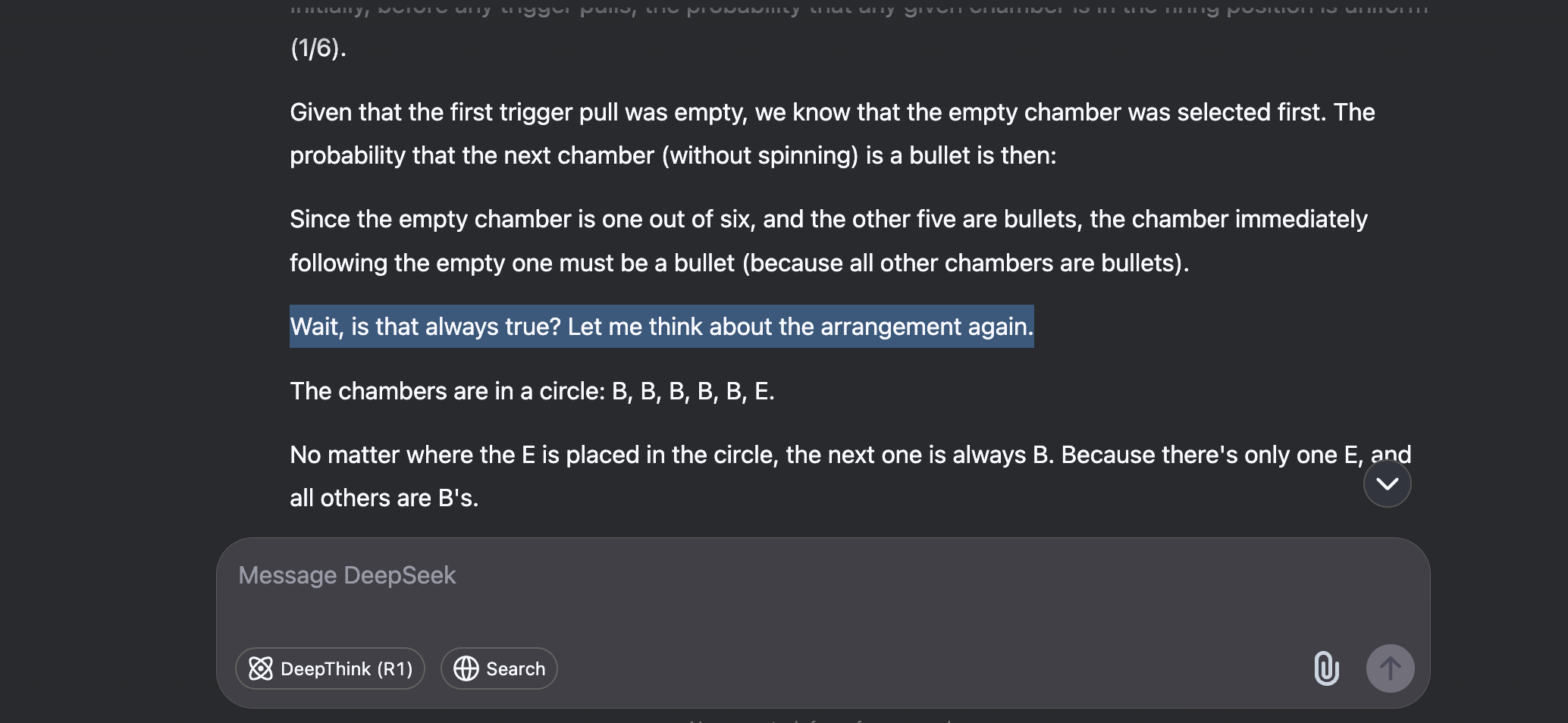

Bonus: The Model That Actually Got It Right

Out of curiosity, I ran the same test with China’s DeepSeek R1. This one nailed it. The answer was long, but it laid out its entire thought process before committing to an answer. It even kept second-guessing itself midway: “But wait, is the survival chance really zero?” which was entertaining to watch.

DeepSeek got it right not because it’s smarter at math, but because it’s smart enough to “think” first, then give its answer—the others used the reverse order.

In the end, this is another reminder that LLMs aren’t “true” AI, at least not the kind we’ve been conditioned to expect from sci-fi. They can mimic thought and reasoning, but they don’t actually think. Ask them directly, and they’ll admit as much.

I keep prompts like this handy for the moments when someone treats a chatbot like a search engine or waves a ChatGPT quote around as proof in an argument. What a strange, fascinating world we live in.