Whether you’re a professional writer or a student who frequently writes essays, you’re probably tired of running your work through AI detectors only to have it flagged as 100% AI-generated. Fortunately, AI checkers aren’t always reliable, and these five examples illustrate why.

1 A Paragraph I Just Asked AI to Write

If AI checkers were 100% accurate, content produced by ChatGPT would be flagged as 100% AI-generated, right?

So, I decided to put exactly this to the test. I’ll give credit where it’s due: a lot of the time, ChatGPT’s content was flagged as 70% to 100% AI-generated. However, as I expected, after a few tries, some ChatGPT-generated text came back as 100% human-written.

For instance, I asked ChatGPT to write me a conversational paragraph about the iPhone 15 Pro’s cameras. I ran the response it sent me through ZeroGPT, which claims to be the most advanced and reliable ChatGPT, GPT4, and AI content detector.

Sure enough, ZeroGPT reported that the text was 100% human-written. I’ve attached a screenshot below: on one side of the screen, you’ll see me asking ChatGPT to write the paragraph about the iPhone 15 Pro’s cameras, and on the other, the exact same response being pasted into the AI detector.

Think about it: if content produced by AI can be flagged as human-written, can you really trust the results when the situation is reversed?

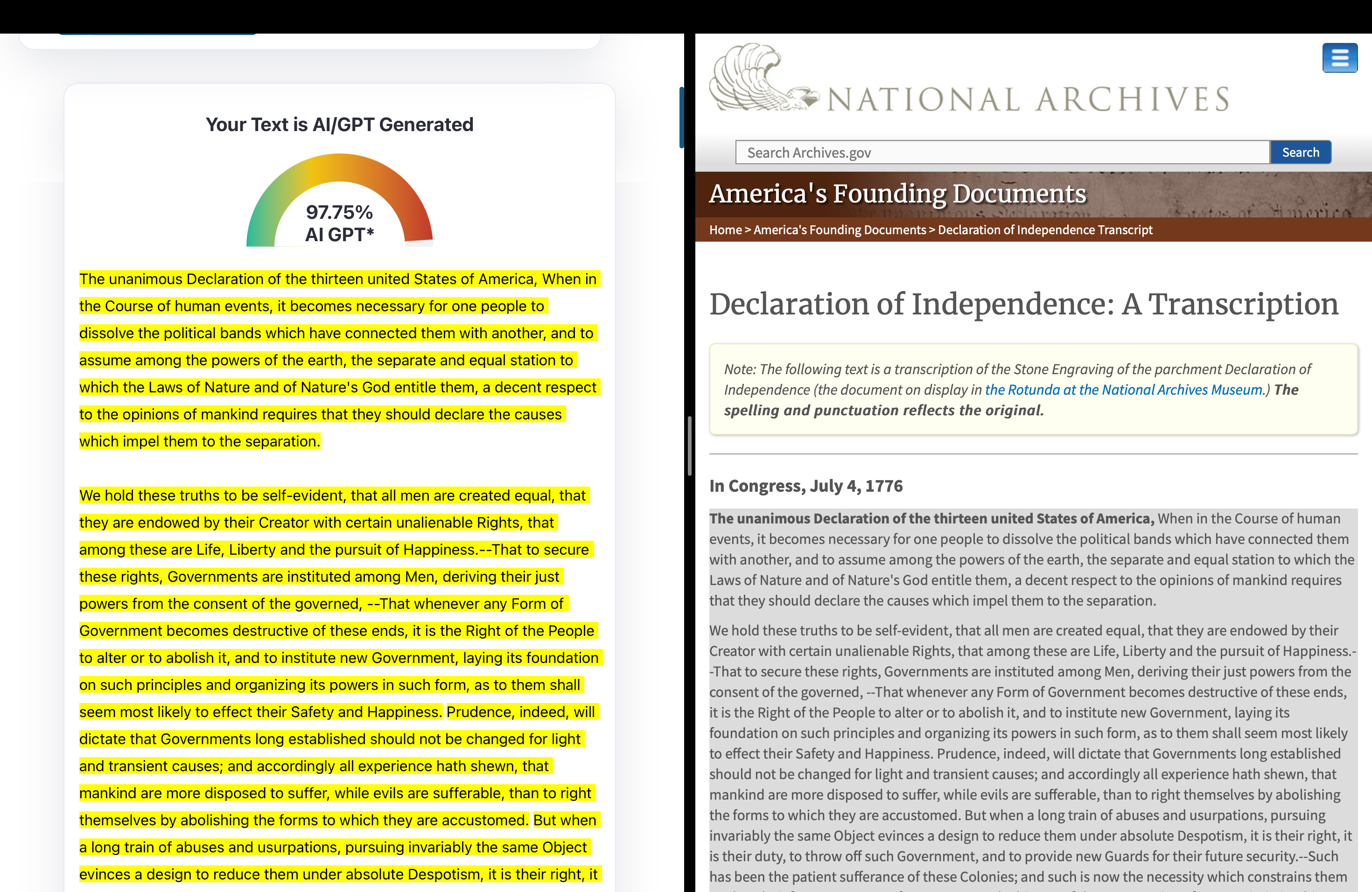

2 The US Declaration of Independence

The US Declaration of Independence was adopted by the Continental Congress on July 4, 1776, which means it was written almost 250 years ago.

Unless Thomas Jefferson, who drafted the document, had time-traveling abilities, there’s simply no way AI could have written the US Declaration of Independence. Interestingly, ZeroGPT thinks otherwise and suggests that 97.75% of the entire document was AI-generated. Make it make sense!

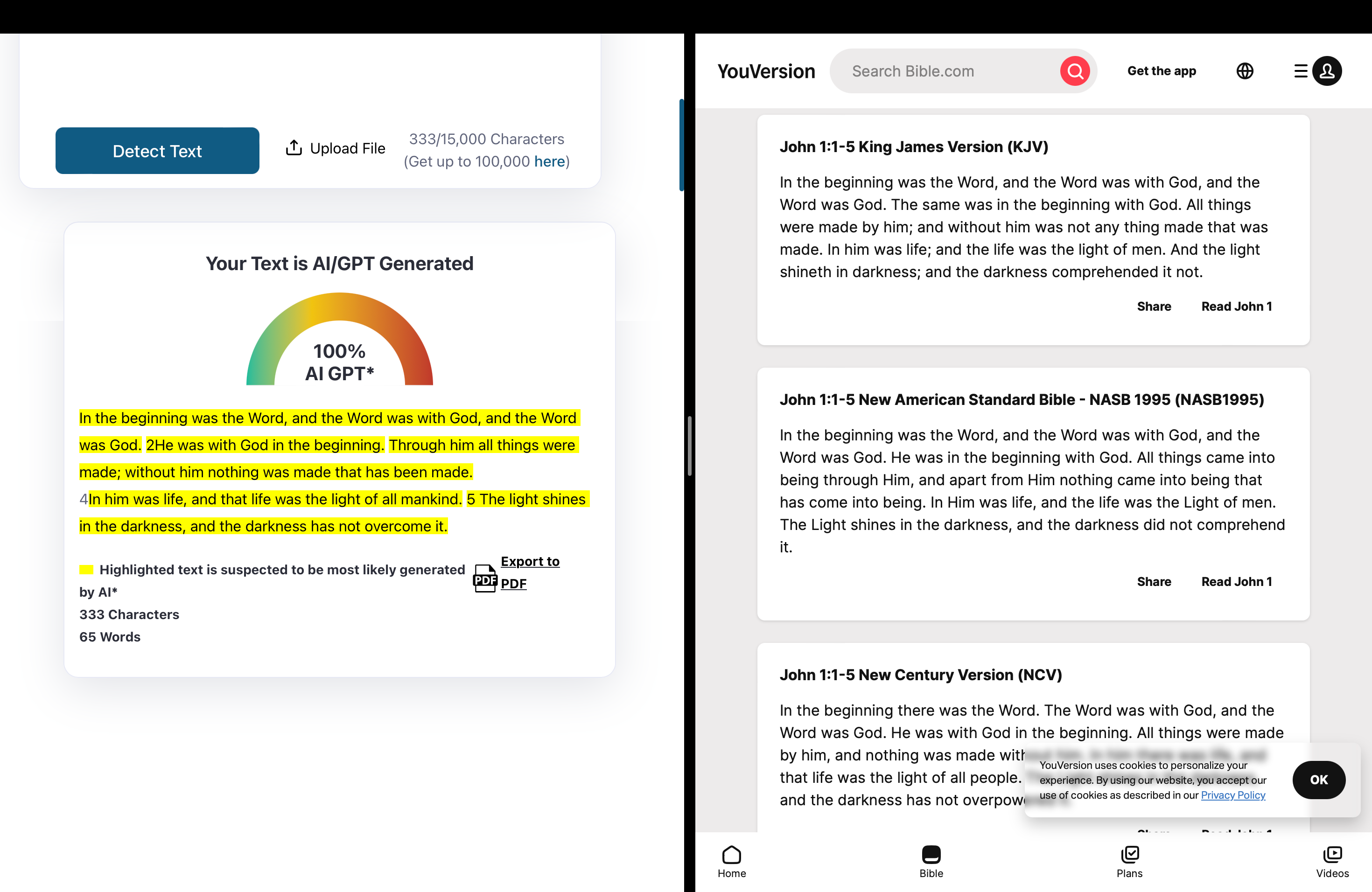

3 The Bible

The Hebrew Bible was completed around 100 CE, predating the Declaration of Independence by about 1,676 years. AI detectors like ZeroGPT often flag well-known literature and historical texts as AI-generated, likely because such widely reproduced works are part of the text AI models are trained on.

Regardless, the fact that inputting text from the Bible into ZeroGPT triggers a 100% AI-generated warning demonstrates that content detectors cannot be fully trusted.

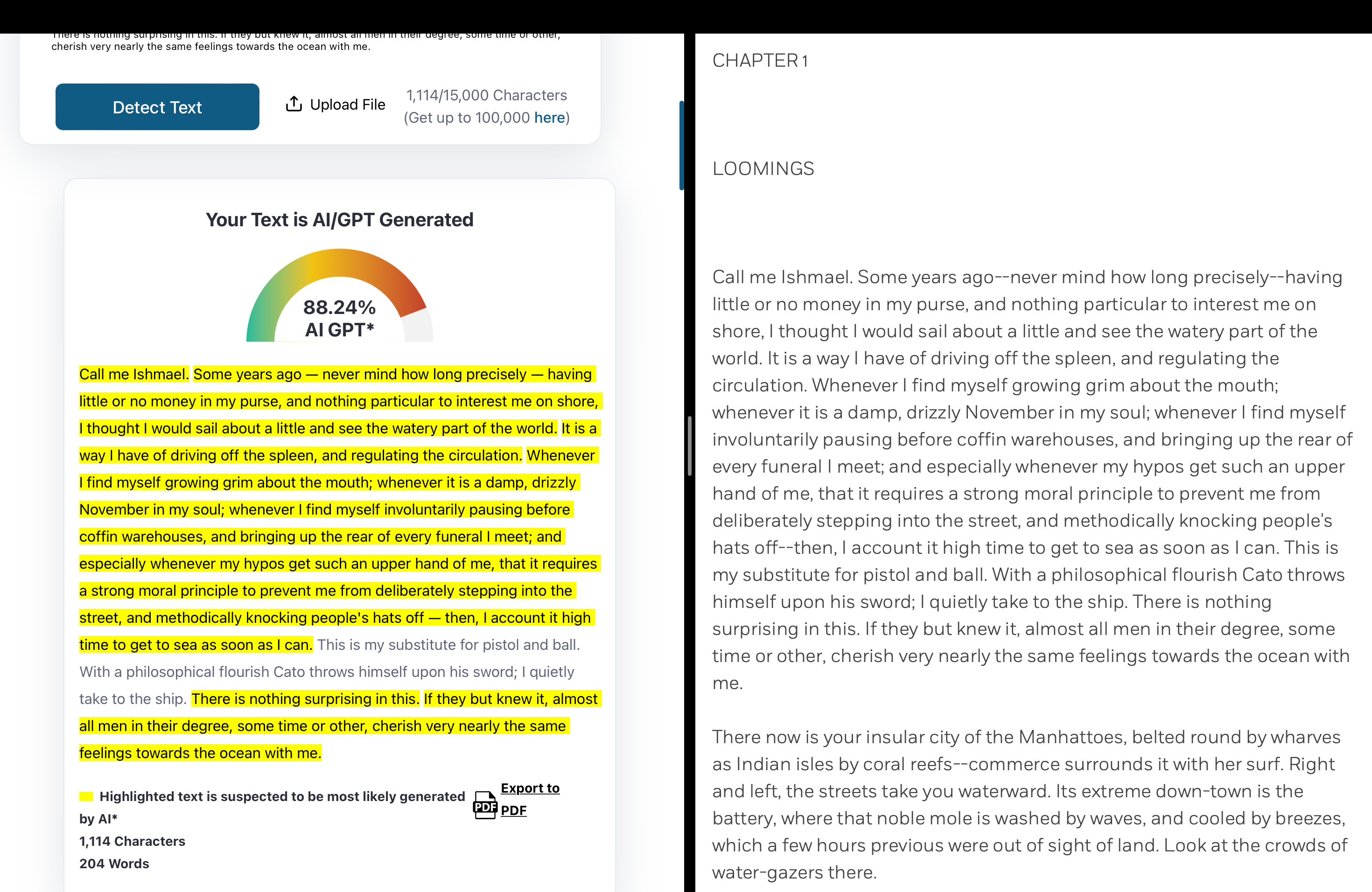

4 Moby-Dick

Moby-Dick, a novel Herman Melville drew from his own experiences at sea, was first published in 1851, long before the advent of AI. I ran an excerpt from its famous first chapter through ZeroGPT, and it was flagged as 88.24% AI-generated.

While I wasn’t particularly surprised by this result, given that AI models are trained on classic literature like this, a well-known text being misclassified as AI still highlights a significant issue and shows just how unreliable content detectors are.

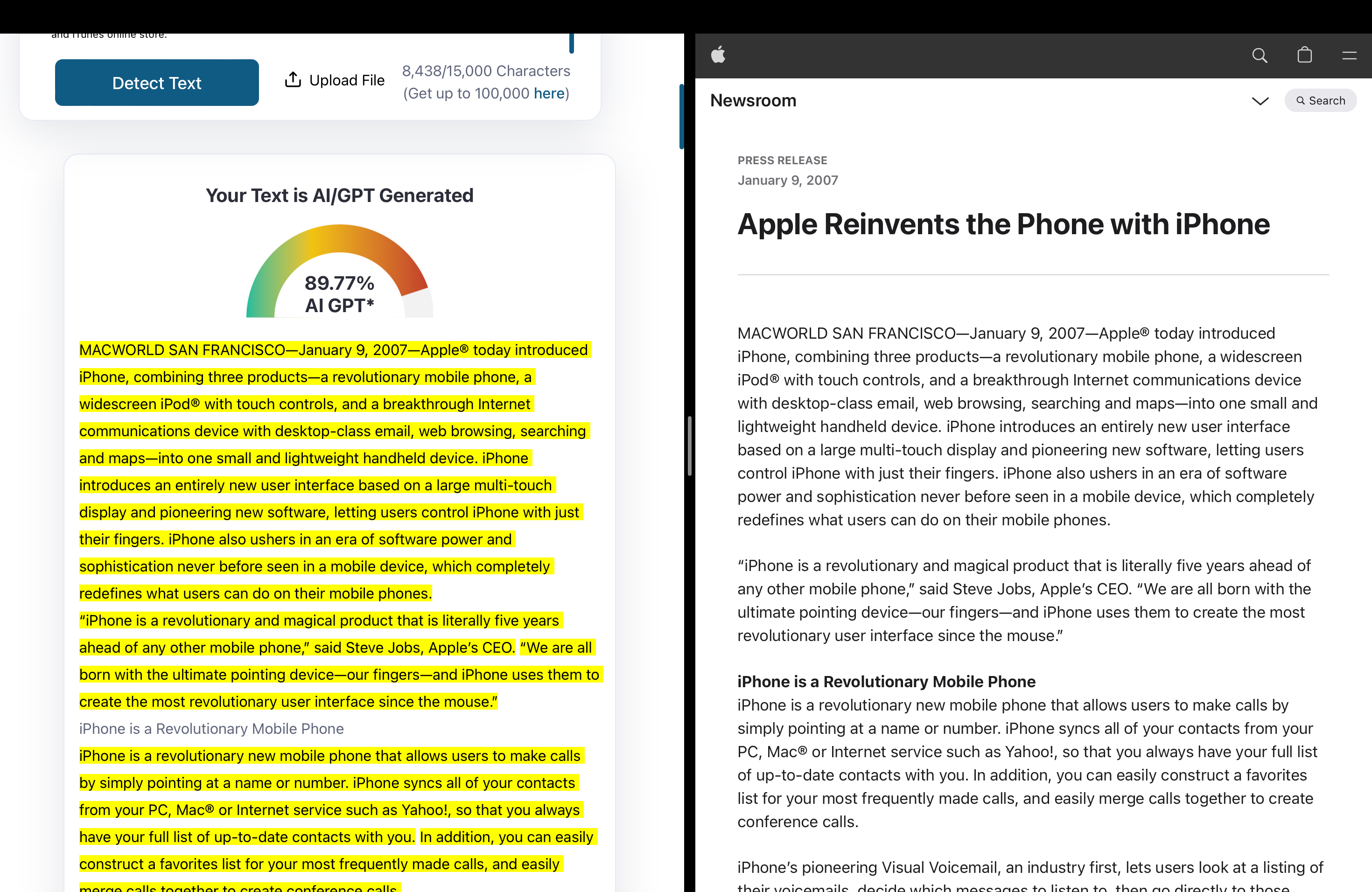

5 Apple’s First-Ever iPhone Press Release

Sure, AI models are trained on a wide range of text, including classic literature like Shakespeare and Moby-Dick. While this could explain why Moby-Dick was mistakenly identified as AI when run through a content detector, I highly doubt a press release by Apple would’ve been used to train an AI model.

Instead of running a newer press release by Apple through a detector, I dug through Apple’s Newsroom archives and came across Apple’s first-ever press release about the iPhone. I pasted the entire press release into ZeroGPT as-is, and it was detected as 89.77% AI-generated.

Other than the headings and a paragraph or two, the entire text was highlighted as AI-generated.

All in all, there are a ton of AI text detectors out there that you can use to run your own tests. However, the bottom line is that AI content detectors don’t work. While this ultimately means that spotting AI-generated text is even more challenging, I believe that text written entirely by AI can still be recognized just by reading it carefully.