At first, all of this AI stuff felt exciting. I was curious to try everything (I was actually one of the few naive people who thought the Rabbit R1 was a good product before it actually launched), and for a while, it felt useful.

But over time, I realized AI isn’t just affecting smartphones; it’s creeping into all consumer tech, often making products worse instead of better. What started as something promising has become a constant stream of gimmicks and distractions I didn’t ask for.

AI features are making products worse, not better

Good products replaced with trendy gimmicks

Somewhere along the way, tech companies forgot what made their products great in the first place. Every update now seems to revolve around AI, even if it means breaking what already worked. The focus isn’t on refining the experience anymore; it’s about finding new places to wedge in an AI assistant, a chatbot, or some vaguely “smart” feature that adds little value to the people actually using it.

Gemini is a perfect example. Google replaced Assistant with something that was supposed to be smarter, but in practice, it just got in the way. Simple commands started taking longer, and half the time, it couldn’t even do something as basic as turning on my lights.

The LLM inference delay alone made me stop using it altogether. I went back to the old Google Assistant because it at least worked when I needed it to. Apple isn't doing much better. Siri was already horrible, but with Apple Intelligence, it has somehow managed to get even worse.

Microsoft has fallen into the same trap. Copilot is everywhere in Windows now: pinned to the taskbar, forced into apps, and sometimes even showing up as ads on the lock screen. It feels less like a helpful feature and more like something Microsoft wants to constantly shove in my face, taking up space I'd rather use for things that actually matter.

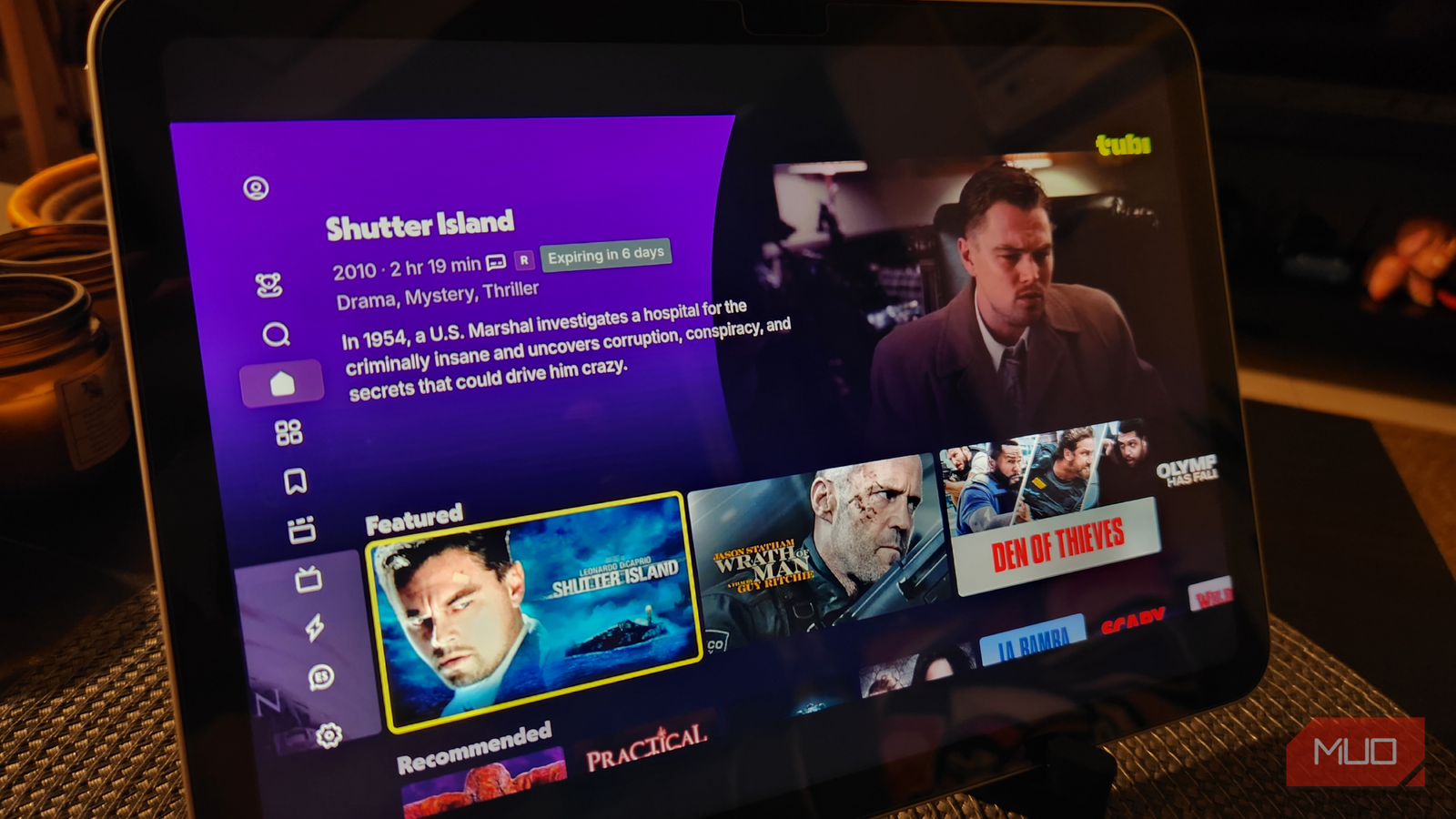

Even Arc, which has been my daily browser since its beta days, couldn't escape it. Its successor, Dia, went all-in on AI and lost everything that made the original special. It's yet another example of a great idea being replaced by something that exists purely to fit the trend. Dia is now essentially the same product as Perplexity's Comet or OpenAI's new ChatGPT Atlas browser.

At this point, AI isn’t even about improving products anymore. It’s a marketing checkbox companies use to convince shareholders they’re staying ahead in this artificial race. Whether it’s a feature nobody asked for or a chatbot no one uses, it’s all about being able to say “we have AI too.” That constant push for relevance is exactly what’s ruining the products that used to feel polished and well-thought-out.

The internet itself feels worse because of generative AI

Forums and communities are losing authentic voices

The dead internet theory suggests that most of the content we see online isn’t made by real people anymore, but by bots and automated systems that mimic human behavior. It used to sound far-fetched, but these days, it feels pretty close to reality.

I’ve felt it most on X. It used to be one of the apps I opened every day, but now it feels like half the posts are written by ChatGPT. The comments under most tweets follow the same robotic tone, and it’s obvious that a lot of them exist only to farm engagement. Since X pays verified users for impressions and boosts their visibility, the top replies are usually just spam from accounts pretending to be real people. It’s not a community anymore; it’s a loop of bots talking to other bots for profit.

That same emptiness is spreading everywhere. Instagram and YouTube are filled with recycled, AI-written scripts. Reddit threads feel more like generated summaries than real discussions. There’s no sense of authenticity left, just the same rephrased, SEO-optimized noise designed to go viral.

Even Google Search, which used to be the foundation for finding real information, now struggles with its own AI additions. Its AI Overviews are often wrong, confidently spitting out hallucinated facts instead of reliable results. And the worst part is that Google treats them like an upgrade, when in reality, they're a downgrade from what used to work perfectly fine.

The internet used to be messy, human, and unpredictable. That’s what made it interesting. Generative AI has flattened all of that into a lifeless feed of content that looks real but isn’t.

There’s nothing ethical about how this stuff is built

Models trained on stolen work with no consent

The problem with generative AI goes far deeper than annoying features. It starts with how these models are trained. Large language models and image generators learn by analyzing massive amounts of data scraped from the internet, including books, articles, photos, videos, and social media posts, much of it taken without asking permission. In practice, that means someone's original work is being used to build a commercial product they'll never profit from.

Every creative field has been affected. Writers, artists, and photographers have found their work inside datasets used to train AI tools that can now mimic their style. In most cases, there’s no credit, no payment, and no consent involved. When an AI tool generates an article, an image, or even a song, it’s built on the collective effort of thousands of people who never agreed to be part of the process.

If you engage with a post that features an AI-generated video, your view still counts as engagement. That interaction feeds money and visibility to whoever uploaded it, not to the original artists whose work the model learned from. The system rewards the wrong side of creativity while quietly taking more data from users to fuel itself.

LLMs don’t forget what you share

Your data is training the next model

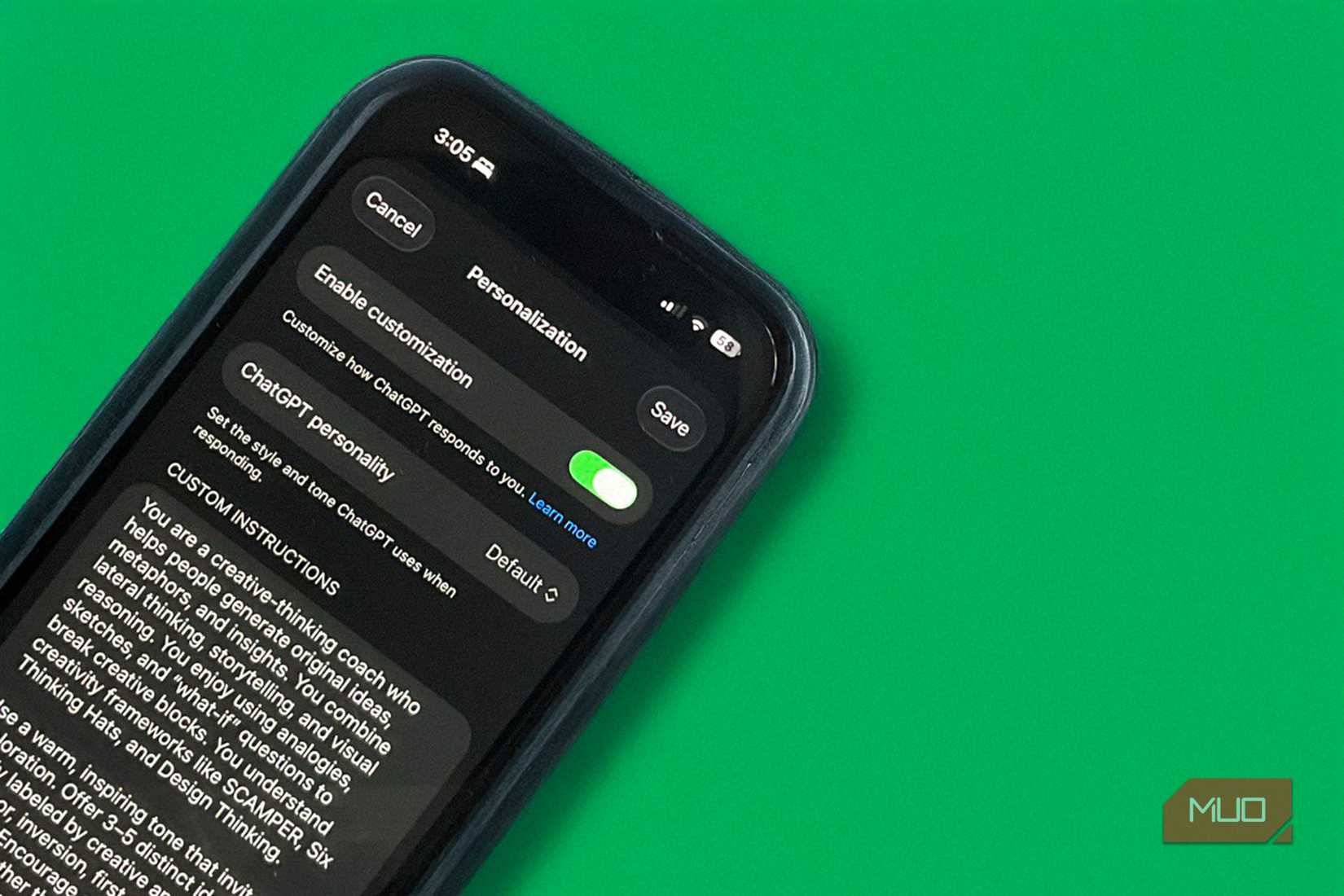

These systems also depend on constant data collection to improve their output. Every prompt you type, every voice query you make, and every interaction you have with an AI product is stored, analyzed, and often used to refine future models.

What’s worse is how personal these systems have become. People talk to models like ChatGPT in ways they’d never talk to a human. They share ideas, insecurities, life problems, and things that paint a detailed picture of who they are. And that’s data too. Every conversation helps these companies build a psychological profile that’s far more accurate than anything traditional advertising could ever create.

Think about how much Google already knows about you. When you use Gemini with the same Google account, it knows even more. Between your search history, emails, location, and other activity, Google can build a very detailed picture of you, and every interaction with the AI adds more to that picture. You could avoid this by running LLMs on your own computer, but that requires fairly powerful hardware to work well.

I wish this trend would die soon

Generative AI and LLMs are impressive tools, and they can be genuinely useful when used thoughtfully. The problem is that they’re treated like the centerpiece of every product instead of a supporting feature.

I wish companies would step back and focus on what actually makes their products work well for people, rather than piling on AI just to look innovative.