Key Takeaways

- Large companies like OpenAI, Google, Microsoft, and Meta are investing in SLMs.

- SLMs are gaining popularity across the industry and are better positioned than LLMs to be the future of AI.

- Examples of SLMs include Google’s Gemini Nano, Microsoft’s Phi-3, and OpenAI’s GPT-4o mini.

Large language models (LLMs) hit the mainstream with the release of OpenAI’s ChatGPT. Since then, several companies have launched their own LLMs, but more companies are now leaning towards small language models (SLMs).

SLMs are gaining momentum, but what are they, and how do they differ from LLMs?

What Is a Small Language Model?

A small language model (SLM) is a type of artificial intelligence model with relatively few parameters (a parameter is a value in the model that is learned during training). Like their larger counterparts, SLMs can generate text and perform other tasks. However, SLMs are trained on less data, have fewer parameters, and require less computational power to train and run.

SLMs focus on key functionalities, and their small footprint means they can be deployed on a range of devices, including those without high-end hardware, such as smartphones. For example, Google’s Gemini Nano is an on-device SLM built from the ground up to run on mobile devices. Because of its small size, Nano can run locally with or without network connectivity, according to the company.

Besides Nano, there are many other SLMs from leading and up-and-coming companies in the AI space. Some popular SLMs include Microsoft’s Phi-3, OpenAI’s GPT-4o mini, Anthropic’s Claude 3 Haiku, Meta’s Llama 3, and Mistral AI’s Mixtral 8x7B.

Some models that you might assume are LLMs are actually SLMs. This is easy to miss because most companies now take a multi-model approach, offering both LLMs and SLMs in the same portfolio. One example is OpenAI’s GPT-4 family, which includes GPT-4, GPT-4o (Omni), and GPT-4o mini.

Small Language Models vs. Large Language Models

While discussing SLMs, we can’t ignore their big counterparts: LLMs. The key difference between an SLM and an LLM is the model size, which is measured in terms of parameters.

As of this writing, there’s no consensus in the AI industry on the maximum number of parameters a model can have and still be considered an SLM, or on the minimum number required to be considered an LLM. However, SLMs typically have millions to a few billion parameters, while LLMs have more, going as high as trillions.

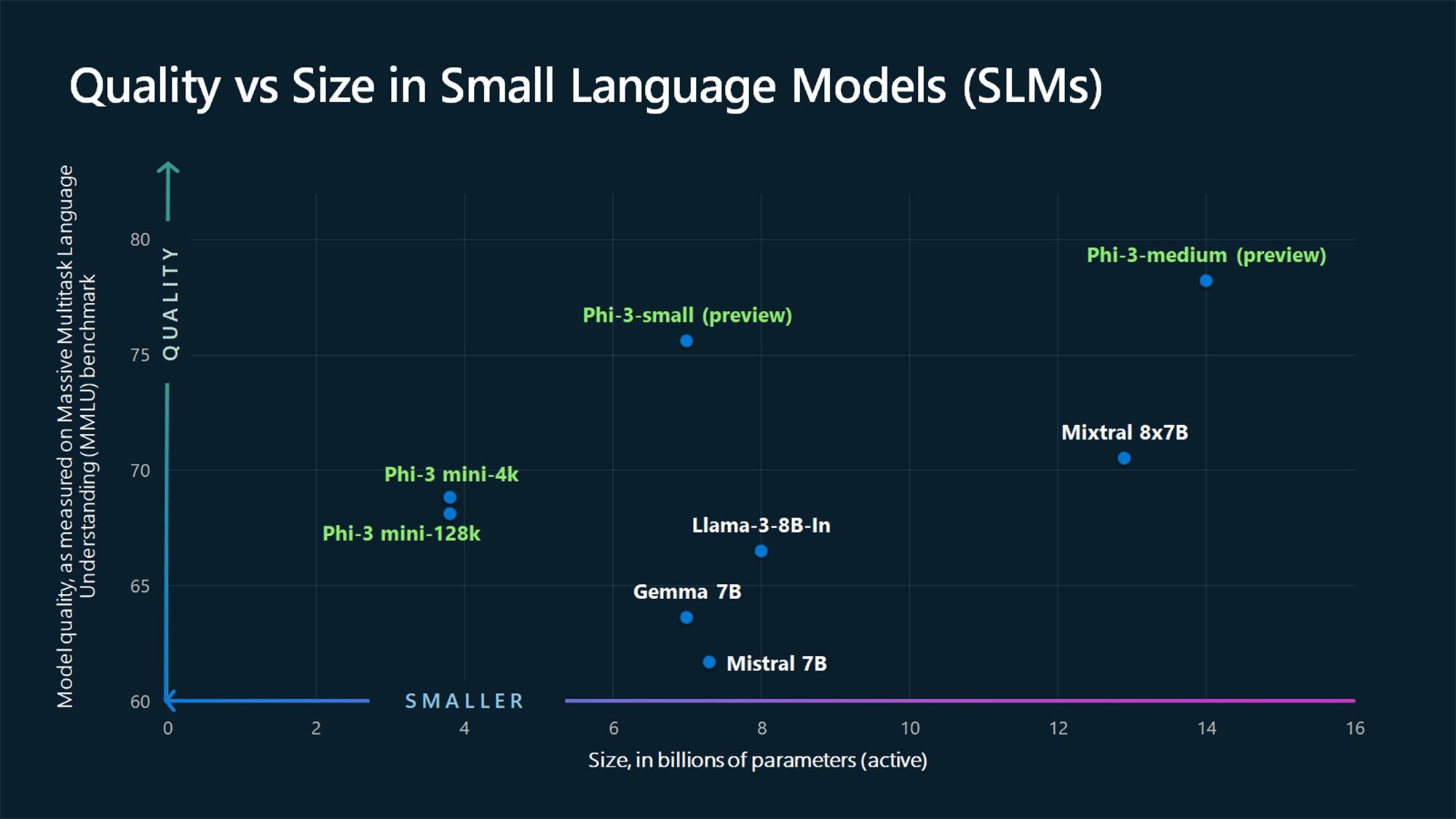

For example, GPT-3, which was released in 2020, has 175 billion parameters (and the GPT-4 model is rumored to have around 1.76 trillion), while Microsoft’s 2024 Phi-3-mini, Phi-3-small, and Phi-3-medium SLMs have 3.8, 7, and 14 billion parameters, respectively.
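To get a feel for that gap, here is a rough Python sketch that compares the parameter counts quoted above and estimates how much memory the weights alone would need. The 2-bytes-per-parameter figure is an assumption (16-bit weights), not something stated by the companies, and the function names are made up for illustration. Real memory use varies with precision, compression, and runtime overhead.

```python
# Parameter counts quoted in the article, in billions.
PARAMS_BILLIONS = {
    "GPT-3": 175.0,
    "Phi-3-medium": 14.0,
    "Phi-3-small": 7.0,
    "Phi-3-mini": 3.8,
}

# Assumption (not from the article): each weight is stored as a 16-bit number.
BYTES_PER_PARAM = 2


def weight_memory_gb(params_billions: float, bytes_per_param: int = BYTES_PER_PARAM) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return params_billions * 1e9 * bytes_per_param / 1e9


def size_ratio(larger: str, smaller: str) -> float:
    """How many times more parameters `larger` has than `smaller`."""
    return PARAMS_BILLIONS[larger] / PARAMS_BILLIONS[smaller]


if __name__ == "__main__":
    for name, billions in PARAMS_BILLIONS.items():
        memory = weight_memory_gb(billions)
        ratio = size_ratio("GPT-3", name)
        print(f"{name}: {billions}B parameters, ~{memory:.1f} GB of weights, "
              f"GPT-3 is {ratio:.1f}x as large")
```

Under that assumption, Phi-3-mini’s weights come to roughly 7.6 GB while GPT-3’s come to roughly 350 GB, which helps explain why only the smaller models are realistic candidates for phones and laptops.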

Another differentiating factor between SLMs and LLMs is the amount of data used for training. SLMs are trained on smaller amounts of data, while LLMs use large datasets. This difference also affects the model’s capability to solve complex tasks.

Because they are trained on so much data, LLMs are better suited for solving different types of complex tasks that require advanced reasoning, while SLMs are better suited for simpler tasks. SLMs use less training data, but that data must be of higher quality if the model is to deliver many of the capabilities found in LLMs in a tiny package.

Why Small Language Models Are the Future

SLMs are better positioned to become the mainstream models used by companies and consumers to perform a wide variety of tasks. Sure, LLMs have their advantages and are more suited for certain use cases, such as solving complex tasks. However, SLMs are the future for most use cases for the following reasons.

1. Lower Training and Maintenance Cost

SLMs need less data for training than LLMs, which makes them a more viable option for individuals and small to medium companies with limited training data, finances, or both. LLMs require large amounts of training data and, by extension, need huge computational resources to both train and run.

To put this into perspective, OpenAI’s CEO, Sam Altman, confirmed while speaking at an event at MIT that it cost more than $100 million to train GPT-4 (as per Wired). Another example is Meta’s OPT-175B LLM. Meta says it was trained using 992 NVIDIA A100 80GB GPUs, which cost roughly $10,000 per unit, as per CNBC. That puts the hardware cost at approximately $9.9 million, without including other expenses like energy, salaries, and more.
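The GPU estimate is a simple multiplication, and it is easy to check. The short Python sketch below uses only the two figures quoted above (992 GPUs at roughly $10,000 each). The function name is made up for illustration, and the result covers hardware only, not energy, salaries, or anything else.

```python
# Figures quoted in the article for Meta's OPT-175B training run.
GPU_COUNT = 992
PRICE_PER_GPU_USD = 10_000  # rough per-unit price of an NVIDIA A100 80GB


def hardware_cost_usd(gpu_count: int, price_per_gpu_usd: int) -> int:
    """Cost of the GPUs alone; ignores energy, salaries, and other expenses."""
    return gpu_count * price_per_gpu_usd


if __name__ == "__main__":
    total = hardware_cost_usd(GPU_COUNT, PRICE_PER_GPU_USD)
    print(f"Estimated GPU cost: ${total:,}")  # Estimated GPU cost: $9,920,000
```

At these prices, the GPUs alone come to just under $10 million.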

With such figures, it’s not viable for small and medium companies to train an LLM. In contrast, SLMs have a lower barrier to entry resource-wise and cost less to run, and thus, more companies will embrace them.

2. Better Performance

Performance is another area where SLMs beat LLMs, thanks to their compact size. SLMs have lower latency and are better suited for scenarios where fast responses are needed, like real-time applications. For example, a quicker response is preferred in voice response systems like digital assistants.

Running on-device (more on this later) also means your query doesn’t have to make a round trip to online servers before you get an answer, leading to faster responses.

3. More Accurate

When it comes to generative AI, one thing remains constant: garbage in, garbage out. Current LLMs have been trained using large datasets of raw internet data. Thus, they might not be accurate in all situations. This is one of the problems with ChatGPT and similar models and why you shouldn’t trust everything an AI chatbot says. On the other hand, SLMs are typically trained on smaller, higher-quality datasets, which can make them more accurate on the tasks they are built for.

SLMs can also be fine-tuned further with focused training on specific tasks or domains, leading to better accuracy in those areas compared to larger, more generalized models.

4. Can Run On-Device

SLMs need less computational power than LLMs and thus are ideal for edge computing use cases. They can be deployed on edge devices like smartphones and autonomous vehicles, which don’t have large computational power or resources. Google’s Gemini Nano model can run on-device, allowing it to work even when you don’t have an active internet connection.

This ability presents a win-win situation for both companies and consumers. It’s a win for privacy because user data is processed locally rather than sent to the cloud, which matters as more AI is integrated into our smartphones, which contain nearly every detail about us. It is also a win for companies, as they don’t need to deploy and run large servers to handle AI tasks.

SLMs are gaining momentum, with the largest industry players, such as OpenAI, Google, Microsoft, Anthropic, and Meta, releasing such models. These models are more suited for simpler tasks, which is what most of us use LLMs for; hence, they are the future.

But LLMs aren’t going anywhere. Instead, they will be used for advanced applications that combine information across different domains to create something new, like in medical research.