GPT-4.5 was marketed as a groundbreaking release, but I wasn't impressed. OpenAI's latest AI model has several areas where it needs improvement, and it failed to deliver on what we were promised.

7

More Emotional Intelligence, but Not Much

One of GPT-4.5's main selling points was improved emotional intelligence. While it's slightly better than the previous version, I don't think the improvement is significant enough.

Conversations with GPT-4.5 still don't feel entirely natural to me. While you can use ChatGPT to help develop your own emotional intelligence, I'm still on the fence about whether AI can develop it too.

I'm sure the model will improve as it receives more information, but I'm not impressed with the current version's EQ. To improve, the app needs to spend more time processing and listening to prompts; right now, it feels like it jumps to conclusions.

6

GPT-4.5’s Explanations Are Fluffy

This version of ChatGPT is supposed to provide more reasoning in conversations, which was previously a big blind spot. Perplexity has always been better than ChatGPT in this area, which is one of many reasons why Perplexity is so good.

However, having used GPT-4.5, I'm still not convinced that its reasoning is anywhere near Perplexity's. Most of the time, the explanations feel like needless fluff. They are just words for the sake of words, which is more annoying than having no reasoning at all.

I'm not alone in my thoughts here, either. Some users have encountered issues where the software repeats the same words over and over. A simple improvement would be for ChatGPT to give its explanation and then ask follow-up questions; its Deep Research tool already does this very well.

5

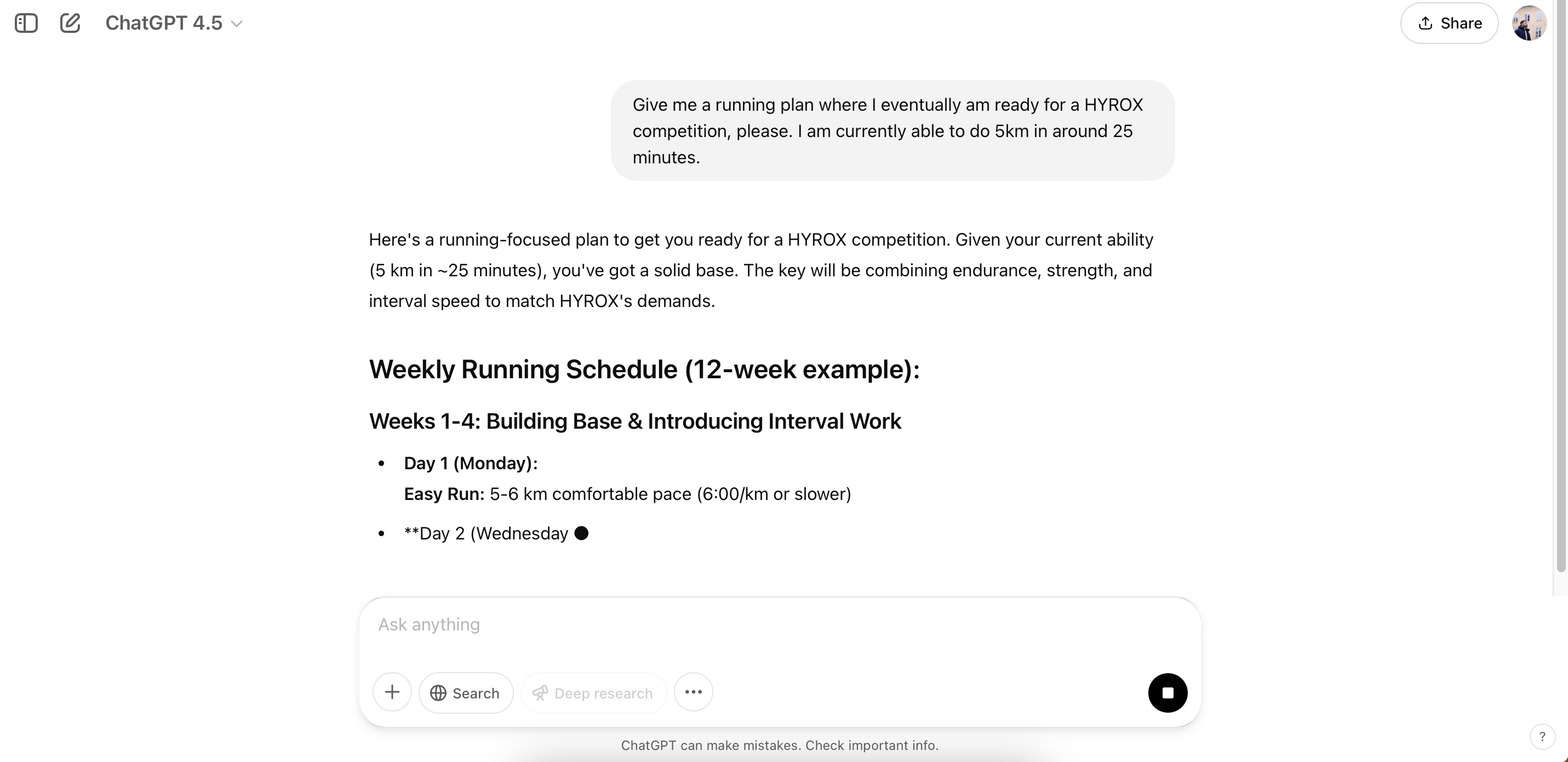

Ignoring My Requests

Since I started using ChatGPT, I’ve had problems with the app not fully listening to what I say. I had hoped these issues would become less prevalent in GPT-4.5, but unfortunately, that doesn’t seem to be the case.

When using this version, I feel like the AI glosses over the key details of my prompts. As a result, I'm left with basic responses that miss what I was trying to achieve. I then need to reword my prompts, which quickly becomes frustrating.

To fix this, I think GPT-4.5 should critically analyze every part of my text. Admittedly, I could be more strategic here and use some prompting tips and tricks. For example, I could tell ChatGPT specifically which areas it should focus on the most.

4

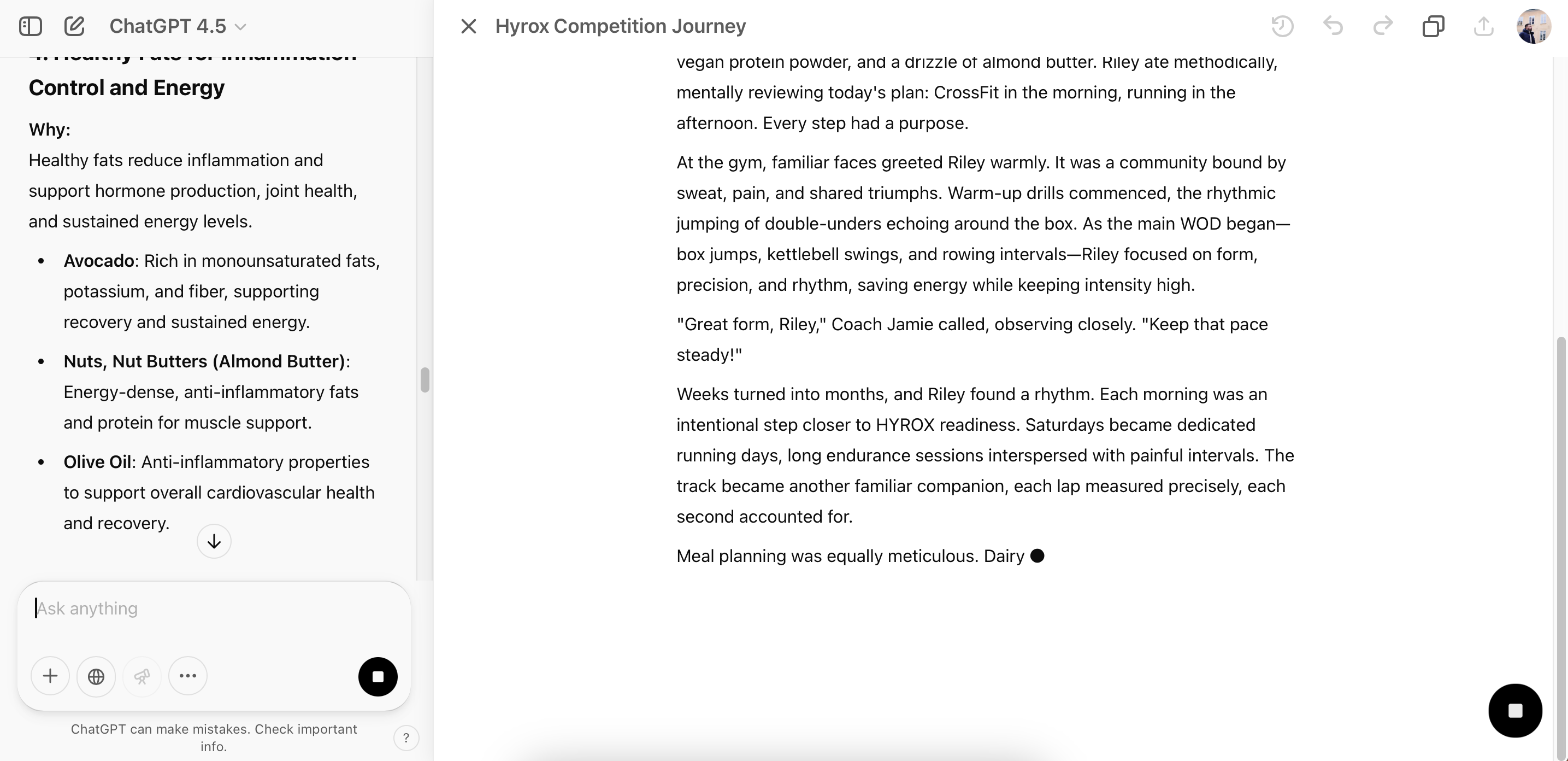

GPT-4.5 Struggles With Longer Text

People have talked about ChatGPT’s writing skills ever since its inception; frankly, I’ve never been too impressed. Maybe I’m biased because I am a professional writer, but it’s so easy to tell when something has been written with AI.

Nonetheless, I always keep an open mind about new technologies, so I was interested to see whether GPT-4.5 could write effectively. While it's better at shorter text, I still don't think the software is great for longer pieces of work. Others are also unconvinced by ChatGPT's writing abilities.

GPT-4.5 still feels too formulaic when writing longer text. Because it sometimes misunderstands prompts, I've had to rewrite them. Moreover, while it's better at non-fiction writing, it's not very good at generating anything that requires genuine human creativity. The text below, for example, has no depth to it at all.

Maybe my mind will change with future models, but for now, I still won't use ChatGPT to do my writing. To improve, OpenAI needs to give the model more human input and a broader understanding of context.

3

Usage Limits Make Experimentation Difficult

Like ChatGPT's Deep Research tool and Sora, OpenAI's AI video generator, GPT-4.5 comes with usage limits. ChatGPT doesn't explicitly state what the limit is, but at the time of writing, it's around 45-50 prompts.

I use ChatGPT every day, mainly for experimentation. I can do this because I have unlimited prompts with its previous models. With GPT-4.5, however, I can't explore as many different avenues, so I feel limited in what I can do.

I'd imagine the usage limit will be removed at some point, but it's quite irritating for now. A simple improvement would be to either raise the limit or give everyone unlimited prompts. Until that happens, a "[number] remaining" counter that's visible at all times would be nice.

2

GPT-4.5’s Responses Are Very Inconsistent

While GPT-4 is by no means perfect, its responses are at least fairly consistent. Before entering a prompt, I have a pretty good idea of whether I will need to rewrite it later. The same, however, cannot be said for GPT-4.5.

Some of the responses I get are pretty good, but others are not so good. In this respect, it’s a lot like DALL-E, OpenAI’s image-generation model. The software can handle simple requests but struggles with more comprehensive ones. Perhaps that’s what Deep Research is for, but you then run into the same prompt limitations as before, and Deep Research feels like overkill for some conversations.

I understand that new features like emotional intelligence will have teething problems. To improve, the software should balance acting more human with fully understanding each conversation. That way, it can build on the best GPT-4 features.

1

GPT-4.5 Struggles With File Uploads

This is more of a technical problem than an issue with how it responds to prompts, but I've sometimes struggled to upload files after switching to GPT-4.5. Since working with uploaded files is exactly where the software's supposedly more advanced reasoning should shine, running into these problems is very annoying.

I’ve had similar problems with Sora. When I first signed in, the app didn’t notice that I already had a ChatGPT Plus subscription. I expect the file upload problem will eventually be fixed; the sooner, the better.

I know that GPT-4.5 has a long way to go before it's perfect, but I'm disappointed with the model in its current form. As with OpenAI's previous models, I expect these kinks will be ironed out before long, but GPT-4.5 won't be my go-to for a while.